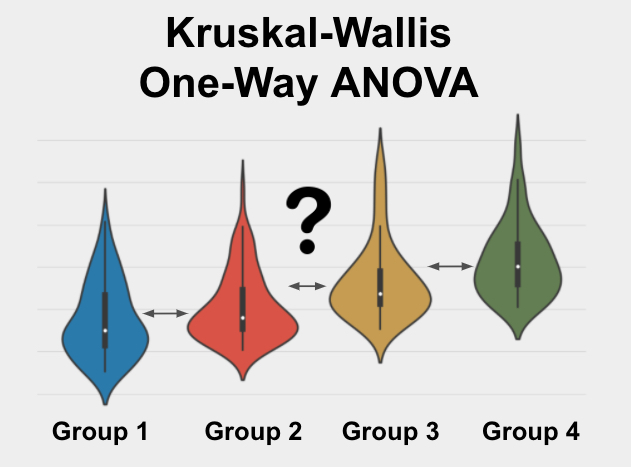

The Kruskal-Wallis One-Way ANOVA is a non-parametric statistical test used to determine whether there are significant differences between three or more independent groups. It extends the Mann-Whitney U test to more than two groups and is used when the assumptions of a traditional One-Way ANOVA cannot be met. The test pools the values from all groups, ranks them together, and calculates a test statistic from each group's rank sum; that statistic is compared to a critical value to determine whether the groups differ significantly. It is commonly used in research studies to compare the medians of different groups and is particularly useful when the data are not normally distributed. The test is named after its developers, William Kruskal and W. Allen Wallis.

What is the Kruskal-Wallis One-Way ANOVA?

The Kruskal-Wallis One-Way ANOVA is a statistical test used to determine if 3 or more groups are significantly different from each other on your variable of interest. Your variable of interest should be continuous, can be skewed, and should have a similar spread across your groups. Your groups should be independent (not related to each other) and you should have enough data (more than 5 values in each group).

The Kruskal-Wallis One-Way ANOVA is also sometimes called the One-Way ANOVA on Ranks, Kruskal-Wallis One-Way Analysis of Variance, Kruskal-Wallis H Test, and the Kruskal-Wallis Test.
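To make the ranking idea concrete, here is a minimal Python sketch that computes the Kruskal-Wallis H statistic by hand and compares it with `scipy.stats.kruskal`. The helper name `kruskal_wallis_h` and the sample values are illustrative assumptions, not something from this article. The p-value uses the usual chi-square approximation with (number of groups - 1) degrees of freedom.

```python
import numpy as np
from scipy.stats import chi2, kruskal, rankdata


def kruskal_wallis_h(*groups):
    """Hypothetical helper: tie-corrected H statistic and approximate p-value."""
    arrays = [np.asarray(g, dtype=float) for g in groups]
    pooled = np.concatenate(arrays)
    n_total = pooled.size

    # Rank all values together; tied values share their average rank.
    ranks = rankdata(pooled)

    # Add up (rank sum squared / group size) over the groups.
    weighted = 0.0
    start = 0
    for arr in arrays:
        group_ranks = ranks[start:start + arr.size]
        weighted += group_ranks.sum() ** 2 / arr.size
        start += arr.size

    h = 12.0 / (n_total * (n_total + 1)) * weighted - 3.0 * (n_total + 1)

    # Standard correction for tied values.
    _, counts = np.unique(pooled, return_counts=True)
    h /= 1.0 - (counts**3 - counts).sum() / (n_total**3 - n_total)

    p_value = chi2.sf(h, df=len(arrays) - 1)
    return h, p_value


# Made-up example data: three groups of six values each.
group_a = [12, 15, 11, 19, 14, 13]
group_b = [22, 25, 17, 24, 16, 29]
group_c = [31, 18, 27, 33, 26, 28]

h_manual, p_manual = kruskal_wallis_h(group_a, group_b, group_c)
h_scipy, p_scipy = kruskal(group_a, group_b, group_c)
print(f"manual: H={h_manual:.4f}, p={p_manual:.4f}")
print(f"scipy:  H={h_scipy:.4f}, p={p_scipy:.4f}")
```

In practice you would call `scipy.stats.kruskal` directly; the manual version is only there to show where the statistic comes from.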

Assumptions for the Kruskal-Wallis One-Way ANOVA

Every statistical method has assumptions. An assumption is a property that your data must satisfy in order for the method's results to be accurate.

The assumptions for the Kruskal-Wallis One-Way ANOVA include:

- Continuous

- Random Sample

- Enough Data

- Similar Spread Across Groups

- Similar Shape Across Groups

Let’s dive into each one of these separately.

Continuous

The variable that you care about (and want to see if it is different across the 3+ groups) must be continuous. Continuous means that the variable can take on any reasonable value.

Some good examples of continuous variables include age, weight, height, test scores, survey scores, yearly salary, etc.

Random Sample

The data points for each group in your analysis must have come from a simple random sample. This means that if you wanted to see if drinking sugary soda makes you gain weight, you would need to randomly select a group of soda drinkers for your soda drinker group.

The key here is that the data points for each group were randomly selected. This is important because if your groups were not randomly determined then your analysis will be incorrect. In statistical terms this is called bias, or a tendency to have incorrect results because of bad data.

If you do not have a random sample, the conclusions you can draw from your results are very limited. You should try to get a simple random sample.

If you have paired samples (2 or more measurements from the same groups of subjects) then you should use a Friedman Test instead.

Enough Data

The sample size (or data set size) should be greater than 5 in each group. Some people argue for more, but more than 5 is probably sufficient.

The sample size also depends on the expected size of the difference across groups. If you expect a large difference across groups, then you can get away with a smaller sample size. If you expect a small difference across groups, then you likely need a larger sample.
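One way to explore the trade-off between sample size and effect size is a small simulation. The sketch below is an illustration under stated assumptions: the helper `estimate_power`, the exponential (skewed) data, and the shift values are all made up for this example. It estimates how often the Kruskal-Wallis test detects a difference at a given group size.

```python
import numpy as np
from scipy.stats import kruskal


def estimate_power(n_per_group, shift, n_sims=500, alpha=0.05, seed=0):
    """Hypothetical helper: share of simulated studies in which the test rejects."""
    rng = np.random.default_rng(seed)
    rejections = 0
    for _ in range(n_sims):
        # Skewed (exponential) data; two of the three groups are shifted upward.
        control = rng.exponential(scale=1.0, size=n_per_group)
        treated_1 = rng.exponential(scale=1.0, size=n_per_group) + shift
        treated_2 = rng.exponential(scale=1.0, size=n_per_group) + shift
        _, p_value = kruskal(control, treated_1, treated_2)
        if p_value <= alpha:
            rejections += 1
    return rejections / n_sims


# Compare a small and a larger group size for the same assumed shift.
for n in (6, 30):
    print(f"n per group = {n}: estimated power = {estimate_power(n, shift=1.0):.2f}")
```

The exact numbers depend on the assumed distribution and shift, so treat the output as a rough guide rather than a sample size rule.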

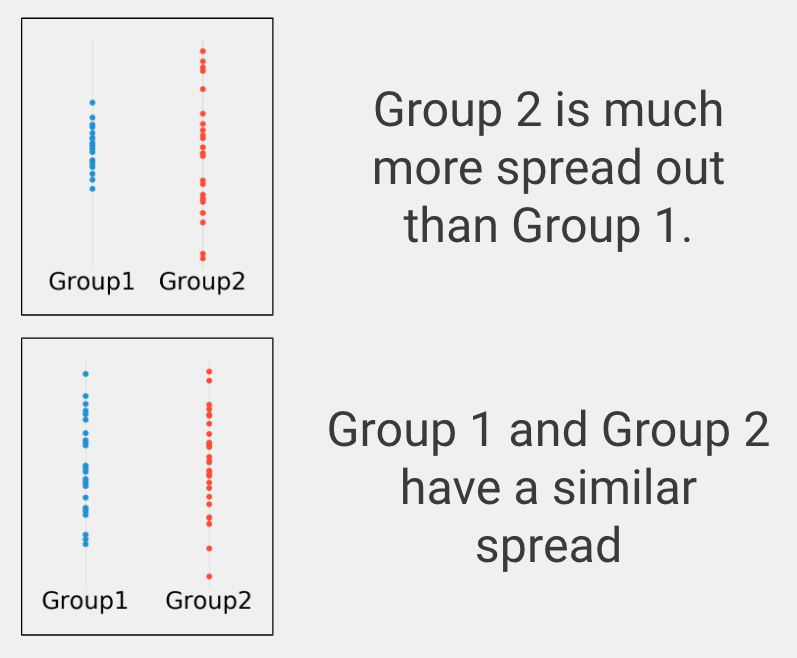

Similar Spread Across Groups

In statistics this is called homogeneity of variance: the values in each group should be spread out to a reasonably similar degree. To examine this assumption, you can plot your data, as in the accompanying figure, and see if the groups are similarly spread on your variable of interest.

If your groups have a substantially different spread on your variable of interest, then you might be able to use Welch's ANOVA instead.
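Besides plotting, you can compare spread numerically. The following sketch is one possible approach, not the article's prescribed method: it reports the interquartile range of each group and runs `scipy.stats.levene` with `center="median"` (the Brown-Forsythe variant, which is better suited to skewed data). The group names and values are made up.

```python
import numpy as np
from scipy.stats import levene

# Made-up example data.
groups = {
    "group_1": [4, 5, 6, 7, 8, 9, 11],
    "group_2": [5, 6, 7, 8, 9, 10, 13],
    "group_3": [3, 5, 6, 8, 9, 10, 12],
}


def interquartile_range(values):
    """Hypothetical helper: distance between the 25th and 75th percentiles."""
    q25, q75 = np.percentile(values, [25, 75])
    return float(q75 - q25)


iqr_by_group = {name: interquartile_range(v) for name, v in groups.items()}
for name, iqr in iqr_by_group.items():
    print(f"{name}: IQR = {iqr:.2f}")

# A small p-value here suggests the spreads differ across groups.
stat, p_value = levene(*groups.values(), center="median")
print(f"Levene (median-centered): statistic={stat:.3f}, p={p_value:.3f}")
```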

Similar Shape Across Groups

In order to say that your groups are different based on their average (or median in this case), your groups must be similarly shaped when you graph them as histograms. If the histograms are similarly shaped, you can say the medians (or averages) are different if the Kruskal-Wallis test is significant.

If your groups are not similarly shaped, then you can talk about the difference between the groups in your results, but you cannot argue for a difference in average value (or median).
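A rough way to compare shapes without a plot is to look at each group's skewness and its histogram counts on a shared set of bins. This is a minimal sketch with made-up data; the helper `shape_summary` is hypothetical, and judging whether the shapes are "similar enough" remains a judgment call.

```python
import numpy as np
from scipy.stats import skew

# Made-up example data, all right-skewed.
groups = {
    "group_1": [2, 3, 3, 4, 5, 7, 12],
    "group_2": [4, 5, 5, 6, 7, 9, 14],
    "group_3": [6, 7, 7, 8, 9, 11, 16],
}


def shape_summary(groups, bins=5):
    """Hypothetical helper: skewness and histogram counts for each group."""
    summary = {}
    for name, values in groups.items():
        values = np.asarray(values, dtype=float)
        # Each group gets its own bin range so shapes are compared, not locations.
        counts, _ = np.histogram(values, bins=bins)
        summary[name] = {"skewness": float(skew(values)), "counts": counts.tolist()}
    return summary


for name, info in shape_summary(groups).items():
    print(f"{name}: skewness={info['skewness']:.2f}, histogram counts={info['counts']}")
```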

When to use the Kruskal-Wallis One-Way ANOVA?

You should use the Kruskal-Wallis One-Way ANOVA in the following scenario:

- You want to know if many groups are different on your variable of interest

- Your variable of interest is continuous

- You have 3 or more groups

- You have independent samples

- You can have a skewed variable of interest

Let’s clarify these to help you know when to use the Kruskal-Wallis One-Way ANOVA.

Difference

You are looking for a statistical test to see whether three or more groups are significantly different on your variable of interest. This is a difference question. Other types of analyses include examining the relationship between two variables (correlation) or predicting one variable using another variable (prediction).

Continuous Data

Your variable of interest must be continuous. Continuous means that your variable of interest can basically take on any value, such as heart rate, height, weight, number of ice cream bars you can eat in 1 minute, etc.

Types of data that are NOT continuous include ordered data (such as finishing place in a race, best business rankings, etc.), categorical data (gender, eye color, race, etc.), or binary data (purchased the product or not, has the disease or not, etc.).

Three or more Groups

The Kruskal-Wallis One-Way ANOVA can be used to compare three or more groups on your variable of interest.

If you have only two groups, you should use the Mann-Whitney U Test instead. If you only have one group and you would like to compare your group to a known or hypothesized population value, you should use the Single Sample Wilcoxon Signed-Rank Test instead.
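The choice of test by number of groups can be sketched in Python with SciPy. The dispatcher `compare_groups` and its `hypothesized_median` parameter are assumptions made for illustration; the underlying calls (`wilcoxon`, `mannwhitneyu`, `kruskal`) are SciPy functions.

```python
import numpy as np
from scipy.stats import kruskal, mannwhitneyu, wilcoxon


def compare_groups(*groups, hypothesized_median=None):
    """Hypothetical helper: pick a rank-based test from the number of groups."""
    if len(groups) == 0:
        raise ValueError("Provide at least one group.")
    if len(groups) == 1:
        if hypothesized_median is None:
            raise ValueError("A single group needs a hypothesized_median.")
        # One group: test whether differences from the hypothesized value center on 0.
        differences = np.asarray(groups[0], dtype=float) - hypothesized_median
        result = wilcoxon(differences)
        return "Wilcoxon signed-rank", float(result.statistic), float(result.pvalue)
    if len(groups) == 2:
        result = mannwhitneyu(groups[0], groups[1], alternative="two-sided")
        return "Mann-Whitney U", float(result.statistic), float(result.pvalue)
    result = kruskal(*groups)
    return "Kruskal-Wallis", float(result.statistic), float(result.pvalue)


# Made-up example data.
a = [5.1, 6.3, 7.2, 8.4, 9.9, 10.5]
b = [6.0, 7.7, 8.1, 9.3, 11.2, 12.8]
c = [8.2, 9.4, 10.6, 12.1, 13.5, 15.0]

print(compare_groups(a, hypothesized_median=6.0))
print(compare_groups(a, b))
print(compare_groups(a, b, c))
```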

Independent Samples

Independent samples means that your groups are not related in any way. For example, if you randomly sample men and then separately randomly sample women to get their heights, the groups should not be related.

If several groups of students take a pre-test and those same students later take a post-test, you have repeated measurements on the same students. This is paired data, in which case you would need to use the Friedman Test instead.
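For paired data, a Friedman Test can be run with `scipy.stats.friedmanchisquare`. SciPy's implementation expects at least three related sets of measurements, so this sketch assumes a hypothetical mid-test in addition to the pre-test and post-test. All scores are made up, and each position in the lists refers to the same student.

```python
from scipy.stats import friedmanchisquare

# Made-up scores for six students measured three times.
pre_test = [60, 55, 70, 65, 58, 62]
mid_test = [64, 59, 72, 70, 61, 66]
post_test = [68, 63, 78, 74, 65, 70]

# Each argument is one measurement occasion; order of students must match.
stat, p_value = friedmanchisquare(pre_test, mid_test, post_test)
print(f"Friedman statistic={stat:.3f}, p={p_value:.4f}")
```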

Skewed Variable of Interest

Your data do not need to be normally distributed (shaped like a bell curve) to perform the Kruskal-Wallis One-Way ANOVA.

Kruskal-Wallis One-Way ANOVA Example

Group 1: Received medical treatment #1.

Group 2: Received medical treatment #2.

Group 3: Received a placebo or control condition.

Variable of interest: Time in days to recover from a disease.

In this example we have three independent groups and one continuous variable of interest, so we expect to perform a One-Way ANOVA. We investigate our data and discover that our variable of interest (time to recover) is positively skewed. Because of this, we decide to use the Kruskal-Wallis One-Way ANOVA.

The null hypothesis, which is statistical lingo for what would happen if the treatments do nothing, is that none of the three groups have different recovery times on average. We are trying to determine if receiving either of the medical treatments will shorten the number of days it takes for patients to recover from the disease.

After the experiment is over, we compare the three groups on our variable of interest (days to fully recover) using a Kruskal-Wallis One-Way ANOVA. When we run the analysis, we get a chi-square statistic and a p-value. The chi-square statistic (the Kruskal-Wallis H statistic, which approximately follows a chi-square distribution) is a measure of how different the three groups are on our recovery-time variable of interest.

A p-value is the chance of seeing results at least as extreme as ours, assuming neither of the treatments actually changes recovery time. A p-value less than or equal to 0.05 is conventionally considered statistically significant, meaning a difference this large would be unlikely if chance alone were at work.

If the chi-square statistic is high and the p-value is low, it means that the recovery time was significantly different in at least one of the groups. Further investigation, such as post hoc pairwise comparisons, is required to determine which group(s) was significantly higher or lower than the others.
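The example can be sketched end to end in Python. The recovery times below are invented for illustration and are not real study data. The follow-up step uses pairwise Mann-Whitney U tests with a Bonferroni adjustment, which is one common choice among several post hoc approaches; the helper `pairwise_posthoc` is hypothetical.

```python
from itertools import combinations

from scipy.stats import kruskal, mannwhitneyu

# Invented recovery times in days for each group.
recovery_days = {
    "treatment_1": [5, 6, 6, 7, 8, 9, 12],
    "treatment_2": [6, 7, 7, 8, 9, 10, 15],
    "placebo": [9, 10, 11, 12, 14, 18, 25],
}

# Overall test: do the three groups differ on recovery time?
h_stat, p_value = kruskal(*recovery_days.values())
print(f"Kruskal-Wallis: H={h_stat:.3f}, p={p_value:.4f}")


def pairwise_posthoc(groups):
    """Hypothetical helper: Bonferroni-adjusted Mann-Whitney U p-values per pair."""
    pairs = list(combinations(groups, 2))
    adjusted = {}
    for first, second in pairs:
        _, p = mannwhitneyu(groups[first], groups[second], alternative="two-sided")
        # Multiply by the number of comparisons and cap at 1.
        adjusted[(first, second)] = min(1.0, p * len(pairs))
    return adjusted


# Only worth interpreting if the overall test is significant.
for pair, p_adj in pairwise_posthoc(recovery_days).items():
    print(f"{pair[0]} vs {pair[1]}: adjusted p={p_adj:.4f}")
```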