
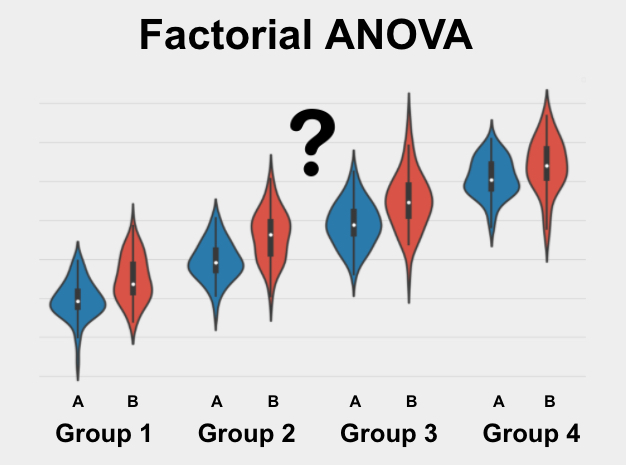

Factorial ANOVA (Analysis of Variance) is a statistical method for analyzing the effects of two or more independent variables (factors) on a dependent variable. It tests each factor on its own, and it also tests whether the factors interact: whether the effect of one factor depends on the level of another. Researchers use it in experiments and other studies to determine whether group means differ significantly and to identify interactions between the factors.

What is a Factorial ANOVA?

The Factorial ANOVA is a statistical test used to determine whether two or more sets of groups (grouping variables, also called factors) are significantly different from each other on your variable of interest, and whether those factors interact. Your variable of interest should be continuous, be normally distributed, and have a similar spread across your groups. In addition, you should have enough data (more than 5 values in each group).

The Factorial ANOVA is also called the Factorial ANOVA F-Test or Factorial Analysis of Variance. The Two-Way ANOVA is its special case with exactly two grouping variables.

Assumptions for the Factorial ANOVA

Every statistical method has assumptions. Assumptions are properties your data must satisfy for the method’s results to be accurate.

The assumptions for the Factorial ANOVA include:

- Continuous

- Normally Distributed

- Random Sample

- Enough Data

- Similar Spread Across Groups

Let’s dive into each one of these separately.

Continuous

The variable that you care about (and want to see if it is different across your groups) must be continuous. Continuous means that the variable can take on any value within a reasonable range.

Some good examples of continuous variables include age, weight, height, test scores, survey scores, yearly salary, etc.

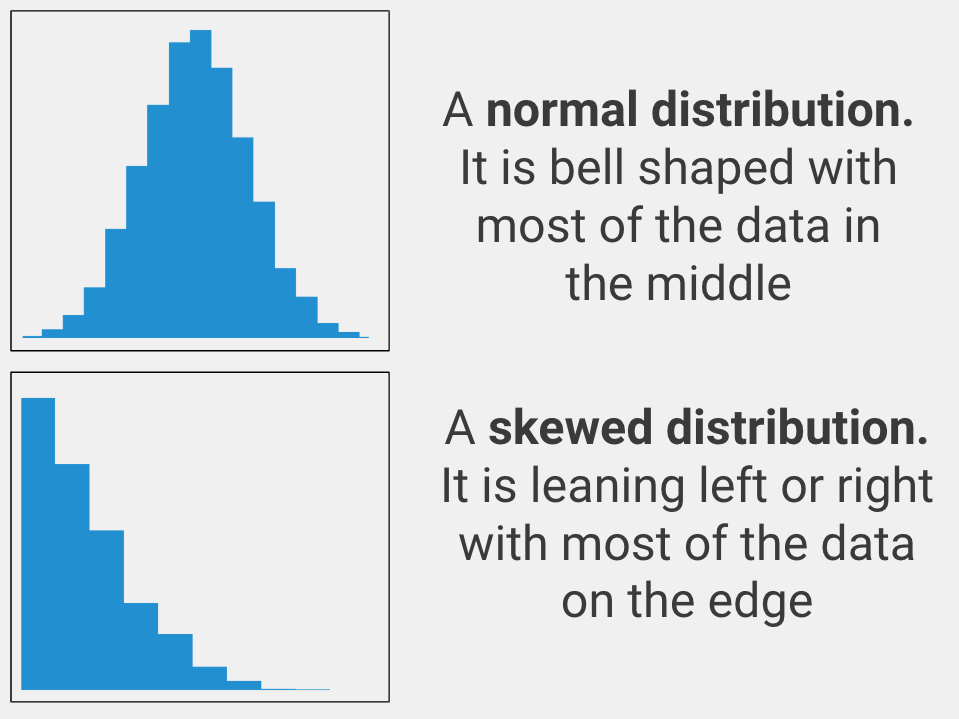

Normally Distributed

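The variable that you care about must be spread out in a normal way within each group. In statistics, this is called being normally distributed (aka it should look like a bell curve when you graph the data). Only use a Factorial ANOVA with your data if the variable you care about is approximately normally distributed.

If your variable is not normally distributed, a nonparametric test such as the Kruskal-Wallis One-Way ANOVA (independent groups) or the Friedman Test (repeated measures) may be an alternative. Note that both of these compare groups on a single grouping variable, so neither tests an interaction between factors.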

Random Sample

The data points for each group in your analysis must have come from a simple random sample. This means that if you wanted to see if drinking sugary soda makes you gain weight, you would need to randomly select a group of soda drinkers for your soda drinker group.

The key here is that the data points for each group were randomly selected. This matters because if your groups were not randomly determined, your results can be systematically distorted. In statistical terms this is called bias: a tendency to get incorrect results because of how the data were collected.

If you do not have a random sample, the conclusions you can draw from your results are limited. You should try to get a simple random sample.
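Below is a minimal sketch of drawing a simple random sample with Python’s standard `random` module. A simple random sample means every subset of the chosen size is equally likely. The sampling frame, the IDs and the helper names `simple_random_sample` and `randomly_assign` are hypothetical. The second helper covers the related experimental practice of randomly assigning people to treatment groups, as in the cholesterol example later on this page.

```python
import random


def simple_random_sample(frame, n, seed=None):
    """Draw n distinct members of `frame`; every subset of size n is equally likely."""
    frame = list(frame)
    if n > len(frame):
        raise ValueError("sample size cannot exceed the size of the sampling frame")
    rng = random.Random(seed)  # a seed makes the draw reproducible
    return rng.sample(frame, n)


def randomly_assign(participants, group_names, seed=None):
    """Shuffle participants, then deal them round-robin into equally sized groups."""
    rng = random.Random(seed)
    shuffled = list(participants)
    rng.shuffle(shuffled)
    groups = {name: [] for name in group_names}
    for position, person in enumerate(shuffled):
        groups[group_names[position % len(group_names)]].append(person)
    return groups


# Hypothetical sampling frame of 500 soda-drinker IDs.
soda_drinkers = [f"drinker-{i:03d}" for i in range(500)]
print(simple_random_sample(soda_drinkers, 10, seed=42))
print(randomly_assign(range(8), ["treatment #1", "treatment #2"], seed=7))
```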

Enough Data

The sample size (or data set size) should be greater than 5 in each group. Some people argue for more, but more than 5 is probably sufficient.
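A quick way to apply the "more than 5 per group" guideline is to count the observations in every cell of the design. In a factorial design, a cell is one combination of factor levels. The sketch below assumes your data are stored as a dictionary that maps each cell label to its list of values. That layout and the function name are illustrative choices, not a standard API.

```python
def cells_below_minimum(cells, minimum=6):
    """Return (cell, count) pairs for cells with fewer than `minimum` observations.

    The default of 6 encodes the "more than 5 values per group" guideline.
    """
    return [(label, len(values)) for label, values in cells.items() if len(values) < minimum]


# Hypothetical cholesterol readings keyed by (treatment, time).
cells = {
    ("treatment #1", "pre"): [210, 198, 225, 201, 215, 207],
    ("treatment #1", "post"): [190, 185, 200, 188, 195],
}
print(cells_below_minimum(cells))
```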

The sample size also depends on the expected size of the difference across groups. If you expect a large difference across groups, then you can get away with a smaller sample size. If you expect a small difference across groups, then you likely need a larger sample.
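The link between effect size and sample size can be seen in the F-statistic itself. The sketch below assumes one grouping variable, equal group sizes, and sample means and standard deviation that come out exactly as expected. Under those assumptions, the between-groups mean square grows with n times the squared differences between means. Halving the difference therefore takes four times as many observations per group to reach the same F. This is a rough illustration only, not a power analysis. A real power analysis uses the noncentral F distribution, for example through statsmodels’ `FTestAnovaPower`. The function name `idealized_f` is hypothetical.

```python
def idealized_f(group_means, sd, n_per_group):
    """F-statistic you would get if every group's sample mean and the pooled SD
    came out exactly as expected (one grouping variable, equal group sizes)."""
    k = len(group_means)
    if k < 2 or sd <= 0 or n_per_group < 2:
        raise ValueError("need 2+ groups, a positive SD, and 2+ observations per group")
    grand_mean = sum(group_means) / k
    # Between-groups mean square: n * (sum of squared deviations of group means) / (k - 1)
    ms_between = n_per_group * sum((m - grand_mean) ** 2 for m in group_means) / (k - 1)
    # Within-groups mean square: the pooled variance
    ms_within = sd ** 2
    return ms_between / ms_within


# Hypothetical numbers: a 2-point vs. a 1-point difference in means, SD of 4.
print(idealized_f([10, 12], sd=4, n_per_group=16))  # → 2.0
print(idealized_f([10, 11], sd=4, n_per_group=64))  # → 2.0
```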

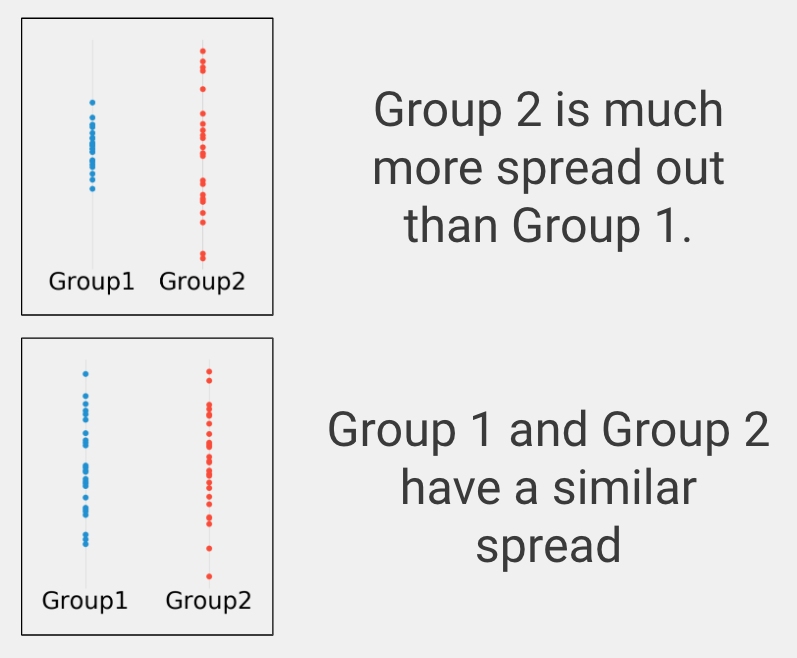

Similar Spread Across Groups

In statistics this is called homogeneity of variance, or making sure the values in each group are spread out by a similar amount (have similar variance). To examine this assumption, you can plot your data as in the figure below and see if the groups are similarly spread on your variable of interest.
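Alongside a plot, you can compare the group variances numerically. The sketch below uses Python’s `statistics.variance` (the sample variance) to compute the ratio of the largest group variance to the smallest. One informal rule of thumb found in some textbooks is that this ratio should stay below about 4, which is the same as saying the largest SD should be no more than about twice the smallest. Treat that cutoff as a rough guide rather than a test. A formal alternative is Levene’s test, for example `scipy.stats.levene`. The group labels and data are hypothetical.

```python
from statistics import variance


def variance_ratio(groups):
    """Return (largest variance / smallest variance, per-group sample variances)."""
    variances = {label: variance(values) for label, values in groups.items()}
    smallest = min(variances.values())
    if smallest == 0:
        raise ValueError("a group has zero variance, so the ratio is undefined")
    return max(variances.values()) / smallest, variances


# Hypothetical data for three groups.
ratio, per_group = variance_ratio({
    "group A": [12.1, 14.3, 13.8, 15.0, 12.9, 14.4],
    "group B": [11.5, 15.2, 13.1, 16.0, 12.2, 14.9],
    "group C": [13.0, 13.9, 14.2, 12.8, 13.5, 14.1],
})
print(round(ratio, 2), per_group)
```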

When to use a Factorial ANOVA?

You should use a Factorial ANOVA in the following scenario:

- You want to know if many groups are different on your variable of interest

- Your variable of interest is continuous

- You have 2 or more sets of groups

- You have a normal variable of interest

Let’s clarify these to help you know when to use a Factorial ANOVA.

Difference

You are looking for a statistical test to see whether groups defined by two or more grouping variables are significantly different on your variable of interest. This is a difference question. Other types of analyses include examining the relationship between two variables (correlation) or predicting one variable using another variable (prediction).

Continuous Data

Your variable of interest must be continuous. Continuous means that your variable of interest can basically take on any value, such as heart rate, height, weight, number of ice cream bars you can eat in 1 minute, etc.

Types of data that are NOT continuous include ordered data (such as finishing place in a race, best business rankings, etc.), categorical data (gender, eye color, race, etc.), or binary data (purchased the product or not, has the disease or not, etc.).

Two or more sets of Groups

A Factorial ANOVA can be used to compare two or more sets of groups on your variable of interest. For instance, if you have a treatment and control group each with pre- and post-treatment data, then you have a 2×2 Factorial ANOVA design.

If you only want to compare two groups, you should use an Independent Samples T-Test instead. If you only have one group and would like to compare it to a known or hypothesized population value, you should use a Single Sample T-Test instead.

Normal Variable of Interest

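Normality was discussed earlier on this page and simply means your plotted data is bell shaped, with most of the data in the middle. If you would like to formally check your data for normality, you can use the Kolmogorov-Smirnov test or the Shapiro-Wilk test. Keep in mind that these tests can only detect departures from normality. A non-significant result does not prove that your data is normal.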
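The Kolmogorov-Smirnov test is built on a single number, D. D is the largest vertical gap between your data’s empirical cumulative distribution and a normal cumulative distribution. The standard-library sketch below computes D only. Turning D into a p-value needs the KS distribution, or Lilliefors-corrected tables when the mean and SD are estimated from the same data. In practice a library such as `scipy.stats.kstest` or `scipy.stats.shapiro` handles this. The function name `ks_distance_to_normal` and the example readings are hypothetical.

```python
from statistics import NormalDist, mean, stdev


def ks_distance_to_normal(values, mu=None, sigma=None):
    """Kolmogorov-Smirnov statistic D between the data and a normal distribution.

    If mu or sigma is omitted, it is estimated from the data (the Lilliefors
    setting, where the usual KS p-values are too lenient).
    """
    xs = sorted(values)
    n = len(xs)
    if n < 2:
        raise ValueError("need at least two values")
    mu = mean(xs) if mu is None else mu
    sigma = stdev(xs) if sigma is None else sigma
    dist = NormalDist(mu, sigma)
    d = 0.0
    for i, x in enumerate(xs, start=1):
        f = dist.cdf(x)
        # The empirical CDF jumps from (i - 1)/n to i/n at x; check both sides of the jump.
        d = max(d, i / n - f, f - (i - 1) / n)
    return d


# Hypothetical cholesterol readings for one group.
print(round(ks_distance_to_normal([198, 205, 211, 190, 220, 201, 207, 195]), 3))
```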

Factorial ANOVA Example

Treatment group 1: Received medical treatment #1.

Treatment group 2: Received medical treatment #2.

Time point 1: Pre-treatment.

Time point 2: Post-treatment.

Variable of interest: Cholesterol levels.

In this example we have two sets of two groups. In other words, we have two grouping variables, or factors (treatment type and time of measurement), with two levels each. After confirming that our data meet the assumptions of the Factorial ANOVA, we proceed with the analysis.

The null hypothesis, which is statistical lingo for what would happen if the treatments do nothing, is that neither medical treatment changes cholesterol levels differently across the two time periods. We are trying to determine whether receiving either of the medical treatments lowers cholesterol levels from pre- to post-treatment.
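For reference, the hypotheses a Factorial ANOVA tests can be written with the conventional two-way ANOVA model shown below. The symbols are standard textbook notation and do not come from this page. Here i indexes the treatment (1, 2), j indexes the time point (pre, post), k indexes an individual reading, and the effects sum to zero across levels. The error term is assumed normal with the same variance in every cell, which is where the normality and similar-spread assumptions come from. This model treats every reading as independent. Because the same people are measured pre and post, a real analysis of this design would typically use a mixed (repeated-measures) ANOVA.

```latex
\begin{aligned}
y_{ijk} &= \mu + \alpha_i + \beta_j + (\alpha\beta)_{ij} + \varepsilon_{ijk},
  \qquad \varepsilon_{ijk} \sim N(0, \sigma^2) \\
H_0^{\text{treatment}} &: \alpha_1 = \alpha_2 = 0 \\
H_0^{\text{time}} &: \beta_1 = \beta_2 = 0 \\
H_0^{\text{treatment} \times \text{time}} &: (\alpha\beta)_{ij} = 0 \quad \text{for all } i, j
\end{aligned}
```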

After the experiment is over, we compare the two treatment groups across the two time periods on our variable of interest (cholesterol levels) using a Factorial ANOVA. The analysis produces an F-statistic and p-value for each main effect (treatment and time) and for their interaction. In this example, we are most interested in the F-statistic and p-value for the time × treatment interaction. This interaction effect asks the question: “Did the two treatment groups’ cholesterol levels change differently over time?”
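To see where those F-statistics come from, here is a minimal standard-library sketch of a two-way ANOVA for a balanced design, meaning every cell has the same number of observations. It splits the total variation into sums of squares for factor A, factor B, the A × B interaction and the within-cell error. Each effect’s mean square is then divided by the within-cell mean square. The function name, the dictionary layout and the cholesterol numbers are all made up for illustration. Like the model above, the sketch treats every reading as independent. Unbalanced designs need a library such as statsmodels, where the choice of sums-of-squares type matters.

```python
from statistics import mean


def two_way_anova(cells):
    """F statistics for a balanced two-way (factorial) ANOVA.

    `cells` maps (level_of_A, level_of_B) -> list of observations, with the
    same number of observations (at least 2) in every cell.
    """
    a_levels = sorted({a for a, _ in cells})
    b_levels = sorted({b for _, b in cells})
    a, b = len(a_levels), len(b_levels)
    n = len(next(iter(cells.values())))
    if len(cells) != a * b or any(len(v) != n for v in cells.values()) or n < 2:
        raise ValueError("this sketch needs a complete, balanced design with n >= 2 per cell")

    grand = mean(x for values in cells.values() for x in values)
    cell_mean = {key: mean(values) for key, values in cells.items()}
    # In a balanced design, each marginal mean is the average of its cell means.
    a_mean = {i: mean(cell_mean[(i, j)] for j in b_levels) for i in a_levels}
    b_mean = {j: mean(cell_mean[(i, j)] for i in a_levels) for j in b_levels}

    ss_a = b * n * sum((a_mean[i] - grand) ** 2 for i in a_levels)
    ss_b = a * n * sum((b_mean[j] - grand) ** 2 for j in b_levels)
    # Interaction: what is left of each cell mean after removing both main effects.
    ss_ab = n * sum((cell_mean[(i, j)] - a_mean[i] - b_mean[j] + grand) ** 2
                    for i in a_levels for j in b_levels)
    ss_within = sum((x - cell_mean[key]) ** 2 for key, values in cells.items() for x in values)

    df_within = a * b * (n - 1)
    ms_within = ss_within / df_within
    results = {"within": {"SS": ss_within, "df": df_within}}
    for effect, ss, df in (("A", ss_a, a - 1), ("B", ss_b, b - 1), ("AxB", ss_ab, (a - 1) * (b - 1))):
        results[effect] = {"SS": ss, "df": df, "F": (ss / df) / ms_within}
    return results


# Made-up cholesterol readings: A = treatment, B = time of measurement.
cells = {
    ("T1", "pre"): [200, 204], ("T1", "post"): [190, 194],
    ("T2", "pre"): [201, 205], ("T2", "post"): [199, 203],
}
results = two_way_anova(cells)
for effect in ("A", "B", "AxB"):
    print(effect, results[effect]["F"])  # → A 6.25, B 9.0, AxB 4.0
```

In these made-up numbers, treatment #1 drops by 10 points while treatment #2 drops by only 2. That difference in change over time is what the AxB (interaction) F-statistic picks up.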

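The p-value for this effect is the probability of seeing results at least as extreme as ours, assuming the treatments don’t actually change cholesterol levels differently over time. By convention, a p-value less than or equal to 0.05 means the result is statistically significant: a difference this large would be unlikely if chance alone were at work, so we reject the null hypothesis. A significant p-value does not prove that the treatments differ, and it says nothing about how large the difference is.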
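The p-value comes from comparing the F-statistic with an F distribution. That distribution has the effect’s degrees of freedom and the within-groups degrees of freedom; in a 2×2 design, each effect has 1 degree of freedom. Libraries compute this directly, for example `scipy.stats.f.sf(F, df_effect, df_within)`. The standard-library sketch below instead uses the identity P(F ≥ f) = I_x(d2/2, d1/2) with x = d2 / (d2 + d1·f). Here I is the regularized incomplete beta function, evaluated with the continued-fraction (Lentz) method described in Numerical Recipes. The function names are hypothetical.

```python
from math import exp, lgamma, log, log1p


def _beta_continued_fraction(a, b, x, max_iter=300, eps=3e-14):
    """Continued fraction for the incomplete beta function (modified Lentz method)."""
    tiny = 1e-300
    qab, qap, qam = a + b, a + 1.0, a - 1.0
    c = 1.0
    d = 1.0 - qab * x / qap
    d = 1.0 / (d if abs(d) > tiny else tiny)
    h = d
    for m in range(1, max_iter + 1):
        m2 = 2 * m
        # Even-numbered term of the continued fraction.
        aa = m * (b - m) * x / ((qam + m2) * (a + m2))
        d = 1.0 + aa * d
        d = 1.0 / (d if abs(d) > tiny else tiny)
        c = 1.0 + aa / c
        c = c if abs(c) > tiny else tiny
        h *= d * c
        # Odd-numbered term.
        aa = -(a + m) * (qab + m) * x / ((a + m2) * (qap + m2))
        d = 1.0 + aa * d
        d = 1.0 / (d if abs(d) > tiny else tiny)
        c = 1.0 + aa / c
        c = c if abs(c) > tiny else tiny
        delta = d * c
        h *= delta
        if abs(delta - 1.0) < eps:
            break
    return h


def regularized_incomplete_beta(a, b, x):
    """I_x(a, b) for a, b > 0 and 0 <= x <= 1."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    front = exp(lgamma(a + b) - lgamma(a) - lgamma(b) + a * log(x) + b * log1p(-x))
    # Use the continued fraction where it converges quickly; otherwise use symmetry.
    if x < (a + 1.0) / (a + b + 2.0):
        return front * _beta_continued_fraction(a, b, x) / a
    return 1.0 - front * _beta_continued_fraction(b, a, 1.0 - x) / b


def f_test_p_value(f_stat, df_effect, df_within):
    """P(F >= f_stat) for an F distribution with (df_effect, df_within) degrees of freedom."""
    if f_stat < 0 or df_effect <= 0 or df_within <= 0:
        raise ValueError("F must be non-negative and degrees of freedom positive")
    x = df_within / (df_within + df_effect * f_stat)
    return regularized_incomplete_beta(df_within / 2.0, df_effect / 2.0, x)


print(round(f_test_p_value(3.0, 2, 2), 6))  # → 0.25
```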