Table of Contents

Quadratic regression is a statistical technique used to model the relationship between a dependent variable and one or more independent variables, assuming a quadratic (curved) relationship between them. In R, quadratic regression can be performed by using the “lm” function, which fits a linear regression model, and then transforming the independent variable(s) to their quadratic form using the “poly” function. This allows for the inclusion of quadratic terms in the regression model, which can provide a better fit to data that exhibits a curved relationship. The resulting model can then be evaluated and analyzed to make predictions and draw conclusions about the relationship between the variables.

Perform Quadratic Regression in R

When two variables have a linear relationship, we can often use simple linear regression to quantify their relationship.

However, when two variables have a quadratic relationship, we can instead use quadratic regression to quantify their relationship.

This tutorial explains how to perform quadratic regression in R.

Example: Quadratic Regression in R

Suppose we are interested in understanding the relationship between number of hours worked and reported happiness. We have the following data on the number of hours worked per week and the reported happiness level (on a scale of 0-100) for 11 different people:

Use the following steps to fit a quadratic regression model in R.

Step 1: Input the data.

First, we’ll create a data frame that contains our data:

#create data data <- data.frame(hours=c(6, 9, 12, 14, 30, 35, 40, 47, 51, 55, 60), happiness=c(14, 28, 50, 70, 89, 94, 90, 75, 59, 44, 27)) #view data data hours happiness 1 6 14 2 9 28 3 12 50 4 14 70 5 30 89 6 35 94 7 40 90 8 47 75 9 51 59 10 55 44 11 60 27

Step 2: Visualize the data.

Next, we’ll create a simple scatterplot to visualize the data.

#create scatterplot

plot(data$hours, data$happiness, pch=16)

We can clearly see that the data does not follow a linear pattern.

Next, we will fit a simple linear regression model to see how well it fits the data:

#fit linear model linearModel <- lm(happiness ~ hours, data=data) #view model summary summary(linearModel) Call: lm(formula = happiness ~ hours) Residuals: Min 1Q Median 3Q Max -39.34 -21.99 -2.03 23.50 35.11 Coefficients: Estimate Std. Error t value Pr(>|t|) (Intercept) 48.4531 17.3288 2.796 0.0208 * hours 0.2981 0.4599 0.648 0.5331 --- Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 Residual standard error: 28.72 on 9 degrees of freedom Multiple R-squared: 0.0446, Adjusted R-squared: -0.06156 F-statistic: 0.4201 on 1 and 9 DF, p-value: 0.5331

The total variance in happiness explained by the model is just 4.46%, as shown by the value for Multiple R-squared.

Step 4: Fit a quadratic regression model.

Next, we will fit a quadratic regression model.

#create a new variable for hours2 data$hours2 <- data$hours^2 #fit quadratic regression model quadraticModel <- lm(happiness ~ hours + hours2, data=data) #view model summary summary(quadraticModel) Call: lm(formula = happiness ~ hours + hours2, data = data) Residuals: Min 1Q Median 3Q Max -6.2484 -3.7429 -0.1812 1.1464 13.6678 Coefficients: Estimate Std. Error t value Pr(>|t|) (Intercept) -18.25364 6.18507 -2.951 0.0184 * hours 6.74436 0.48551 13.891 6.98e-07 *** hours2 -0.10120 0.00746 -13.565 8.38e-07 *** --- Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 Residual standard error: 6.218 on 8 degrees of freedom Multiple R-squared: 0.9602, Adjusted R-squared: 0.9502 F-statistic: 96.49 on 2 and 8 DF, p-value: 2.51e-06

The total variance in happiness explained by the model jumped to 96.02%.

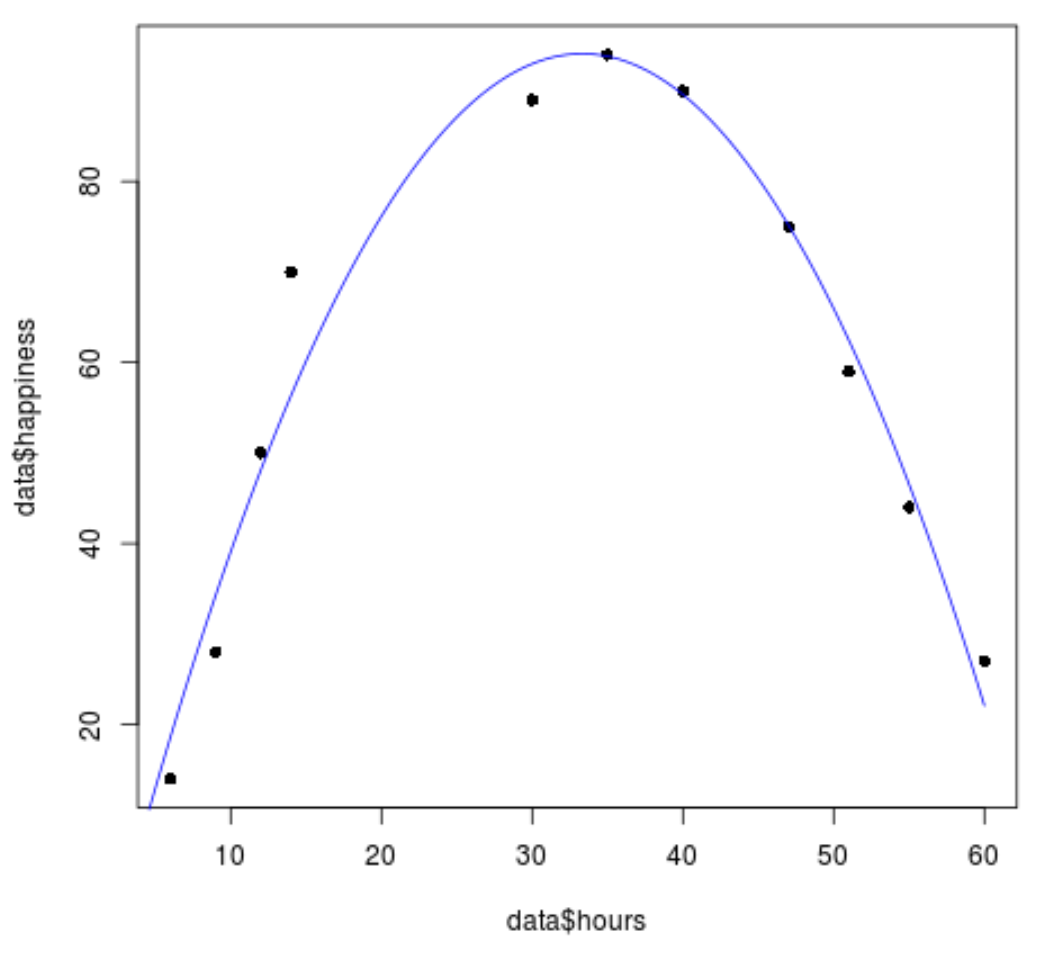

We can use the following code to visualize how well the model fits the data:

#create sequence of hour values hourValues <- seq(0, 60, 0.1) #create list of predicted happines levels using quadratic model happinessPredict <- predict(quadraticModel,list(hours=hourValues, hours2=hourValues^2)) #create scatterplot of original data values plot(data$hours, data$happiness, pch=16) #add predicted lines based on quadratic regression model lines(hourValues, happinessPredict, col='blue')

We can see that the quadratic regression line fits the data values quite well.

Step 5: Interpret the quadratic regression model.

In the previous step we saw that the output of the quadratic regression model was as follows:

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) -18.25364 6.18507 -2.951 0.0184 *

hours 6.74436 0.48551 13.891 6.98e-07 ***

hours2 -0.10120 0.00746 -13.565 8.38e-07 ***

Based on the coefficients shown here, the fitted quadratic regression would be:

Happiness = -0.1012(hours)2 + 6.7444(hours) – 18.2536

We can use this equation to find the predicted happiness of an individual, given the number of hours they work per week.

For example, an individual that works 60 hours per week is predicted to have a happiness level of 22.09:

Happiness = -0.1012(60)2 + 6.7444(60) – 18.2536 = 22.09

Conversely, an individual that works 30 hours perk week is predicted to have a happiness level of 92.99:

Happiness = -0.1012(30)2 + 6.7444(30) – 18.2536 = 92.99