
Cohen’s Kappa is a statistical measure of agreement between two raters or measurement methods that classify the same items into categories. To calculate Cohen’s Kappa in SPSS, follow these steps:

1. Open the SPSS software and import the data set containing the ratings or measurements from the two raters.

2. Go to the “Analyze” menu, choose “Descriptive Statistics”, and select “Crosstabs”.

3. In the Crosstabs window, move one rater’s variable into the “Rows” box and the other rater’s variable into the “Columns” box.

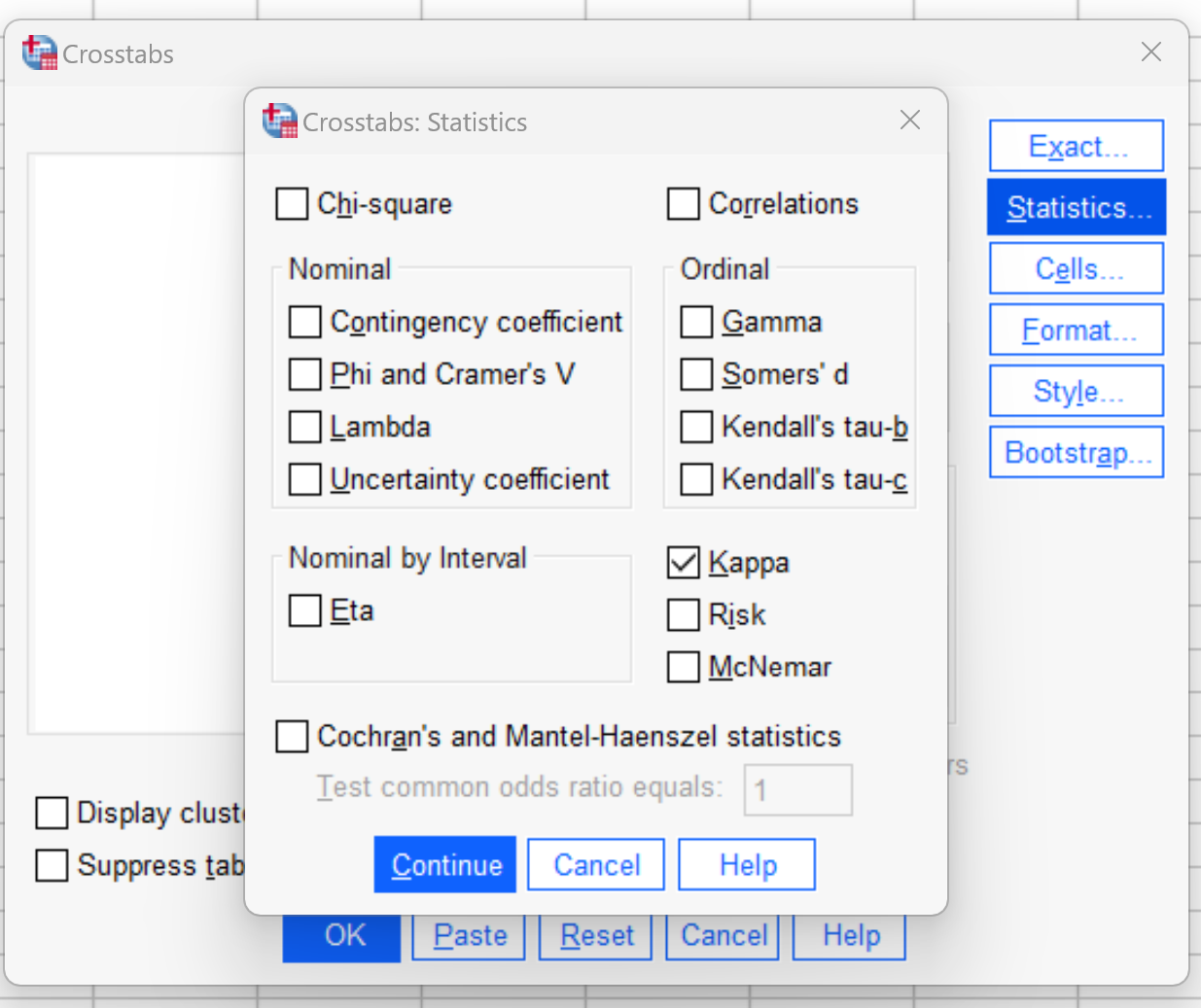

4. Click on the “Statistics” button and select “Kappa” from the options.

5. Click “Continue” and then “OK” to generate the results.

6. The Cohen’s Kappa value will be displayed in the output window, along with other relevant statistics.

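Note that Cohen’s Kappa is intended for categorical ratings (nominal or ordinal). The unweighted Kappa that SPSS reports here treats every disagreement as equally serious, so for ordinal data a near miss counts the same as a large disagreement. SPSS also needs both raters to use the same set of category values so that the crosstab is square. Finally, a larger sample gives a more precise estimate of Kappa.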

Calculate Cohen’s Kappa in SPSS

Cohen’s Kappa is used to measure the level of agreement between two raters or judges who each classify items into mutually exclusive categories.

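Cohen’s Kappa is calculated as:

k = (po - pe) / (1 - pe)

where:

- po: The observed agreement, i.e. the proportion of items on which the two raters agree

- pe: The chance agreement, i.e. the proportion of items on which the raters would be expected to agree by chance, given how often each rater uses each category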

Rather than just calculating the percentage of items that the raters agree on, Cohen’s Kappa attempts to account for the fact that the raters may happen to agree on some items purely by chance.
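To make the chance correction concrete, here is a minimal Python sketch of the formula. Python is not part of the SPSS workflow; the function name `cohens_kappa` and the four ratings are hypothetical and used only for illustration.

```python
from collections import Counter


def cohens_kappa(ratings_a, ratings_b):
    """Cohen's kappa for two equal-length lists of category labels."""
    if len(ratings_a) != len(ratings_b) or not ratings_a:
        raise ValueError("need two non-empty rating lists of equal length")
    n = len(ratings_a)

    # po: share of items on which both raters chose the same category
    po = sum(a == b for a, b in zip(ratings_a, ratings_b)) / n

    # pe: chance agreement, from each rater's category proportions
    counts_a = Counter(ratings_a)
    counts_b = Counter(ratings_b)
    pe = sum((counts_a[c] / n) * (counts_b[c] / n) for c in counts_a)

    # kappa is undefined when chance agreement is already 100%
    if pe == 1:
        raise ValueError("kappa is undefined when pe equals 1")
    return (po - pe) / (1 - pe)


# Hypothetical ratings (not the dataset used in this tutorial)
rater_a = ["Yes", "Yes", "No", "No"]
rater_b = ["Yes", "No", "No", "No"]
kappa = cohens_kappa(rater_a, rater_b)
print(round(kappa, 3))
```

In this toy example the raters agree on 3 of 4 items (75%), but half of that agreement would be expected by chance (pe = 0.5), so Kappa comes out at 0.5 rather than 0.75.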

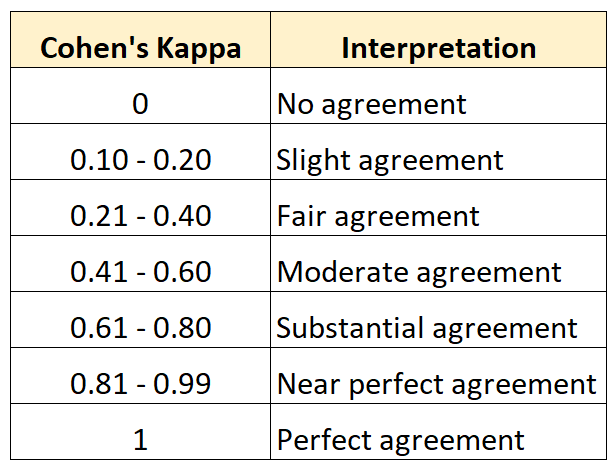

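The value for Cohen’s Kappa can range from -1 to 1, although in practice it usually falls between 0 and 1, where:

- 0 indicates agreement no better than would be expected by chance

- 1 indicates perfect agreement between the two raters.

Negative values indicate that the raters agree less often than would be expected by chance.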

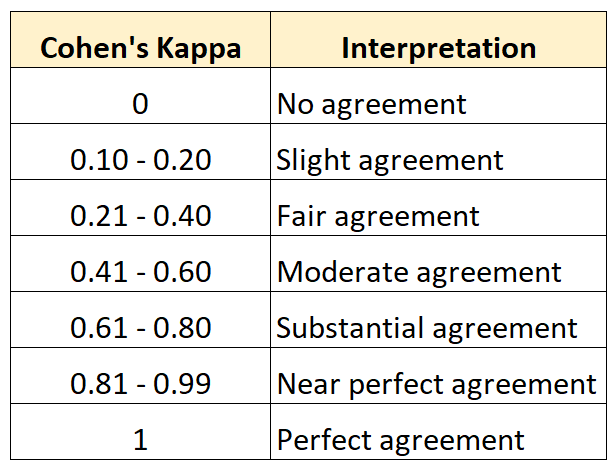

The following table summarizes how to interpret different values for Cohen’s Kappa:

The following example shows how to calculate Cohen’s Kappa in SPSS.

Example: How to Calculate Cohen’s Kappa in SPSS

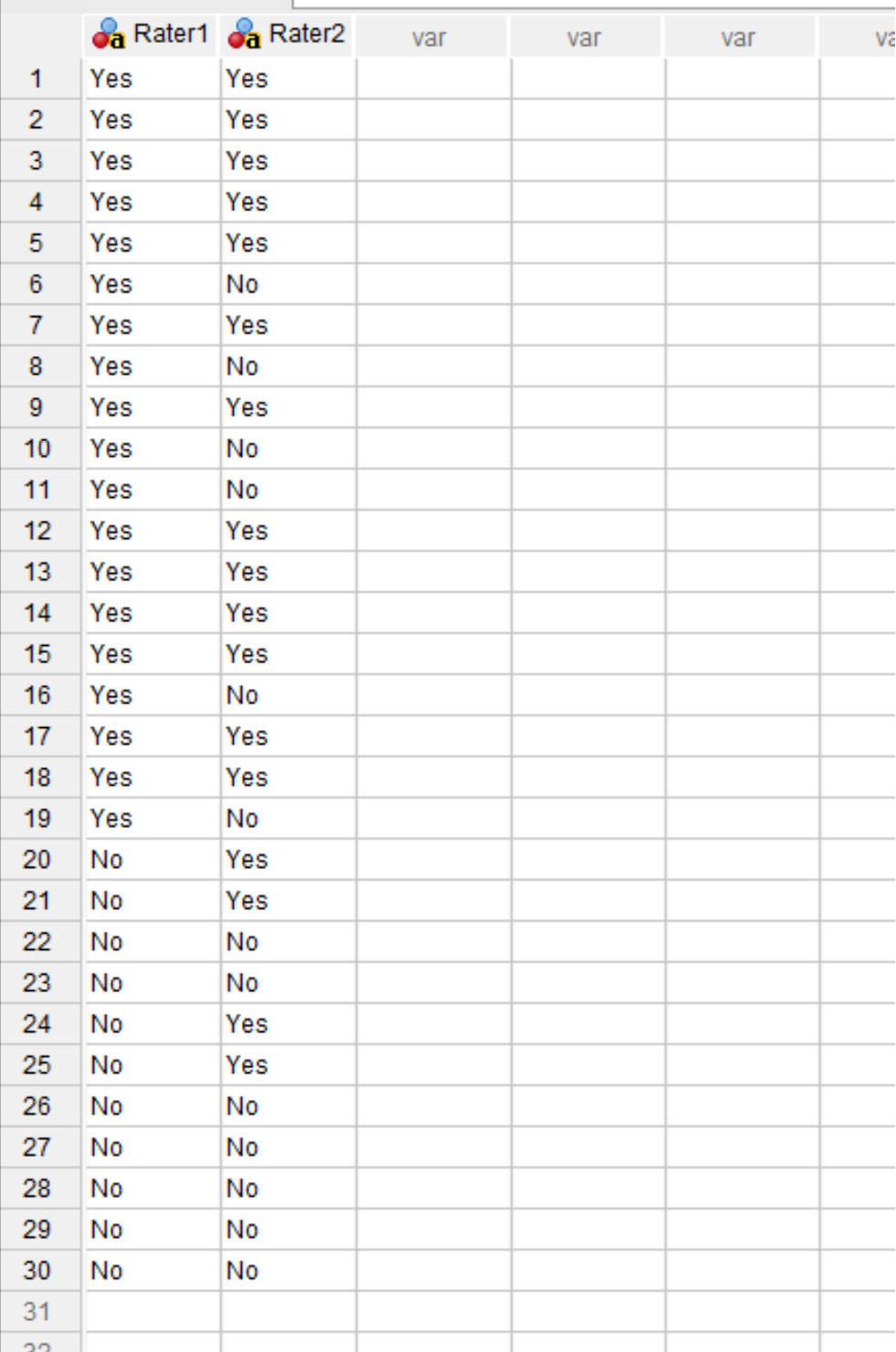

Suppose two art museum curators are asked to rate 30 paintings on whether they’re good enough to be shown in a new exhibit.

The following dataset shows the ratings given by each rater:

Suppose we would like to calculate Cohen’s Kappa to measure the level of agreement between the two curators.

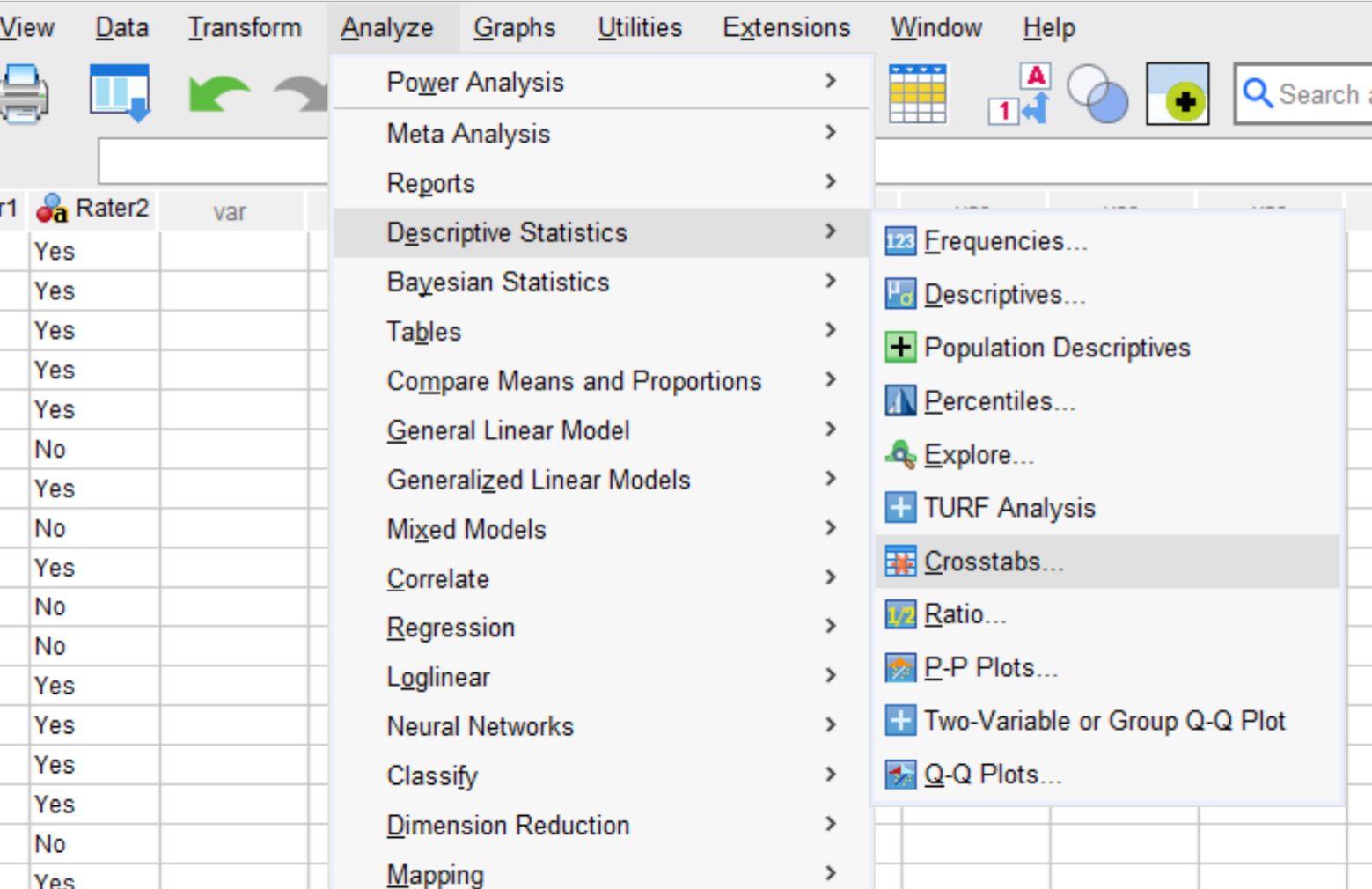

To do so, click the Analyze tab, then click Descriptive Statistics, then click Crosstabs:

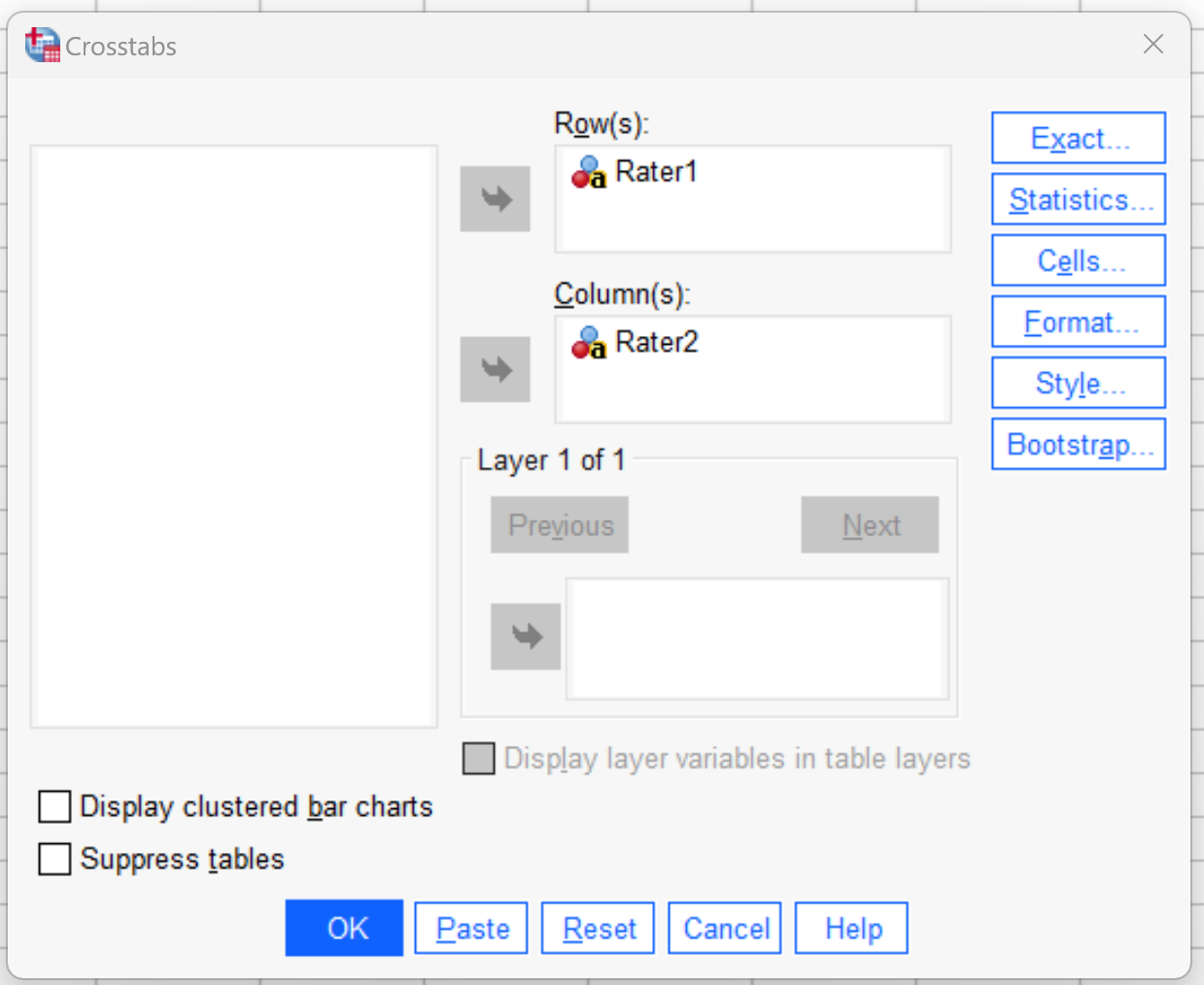

In the new window that appears, drag the Rater1 variable into the Rows box, then drag the Rater2 variable into the Columns box:

Next, click the Statistics button. In the new window that appears, check the box next to Kappa to indicate that you would like to calculate Cohen’s Kappa:

Next, click Continue. Then click OK.

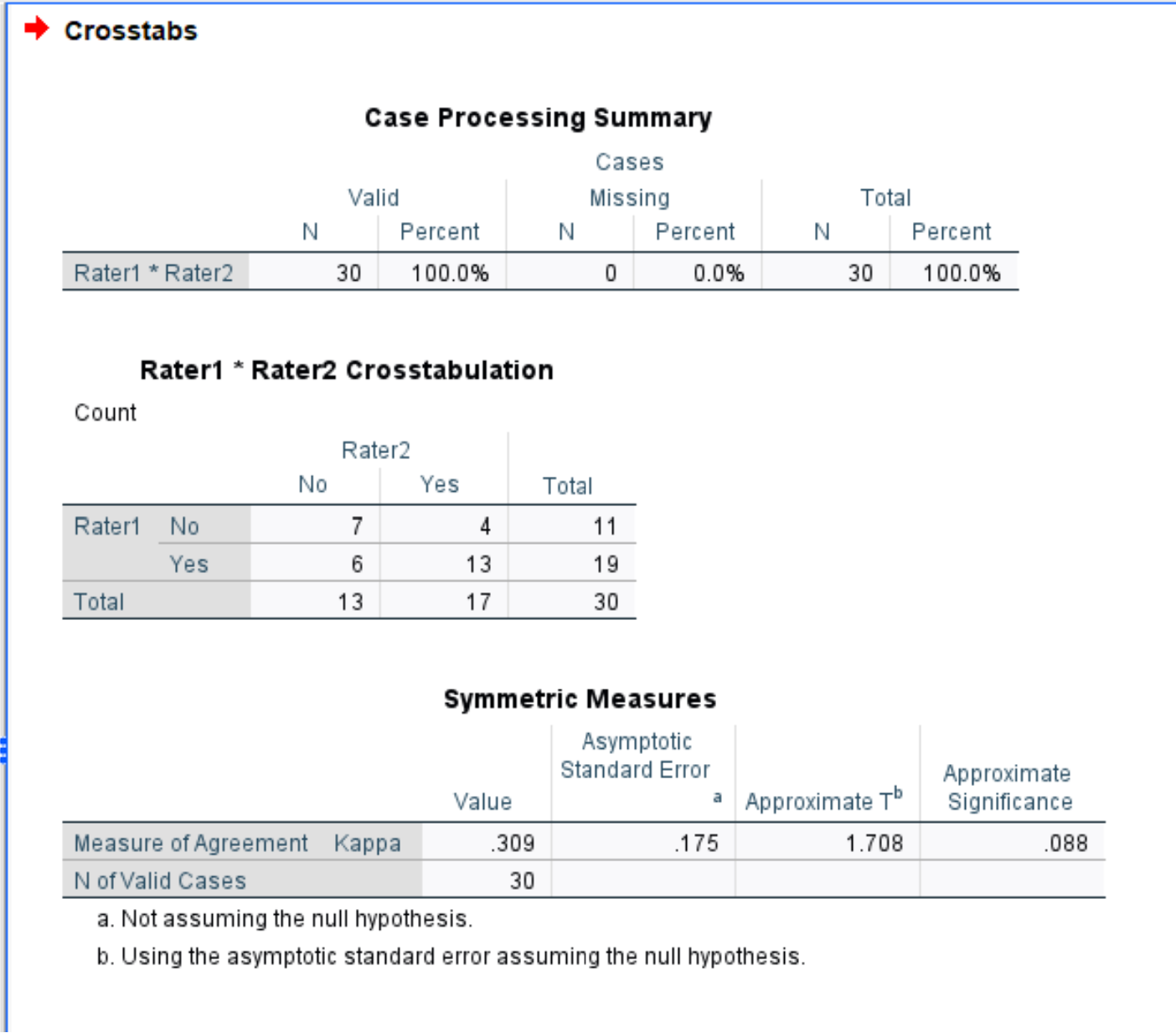

The following output will be produced:

The crosstab shows how many paintings received each combination of “Yes” and “No” ratings from the two raters.

For example, we can see:

- There were 7 paintings that both raters rated No.

- There were 4 paintings that Rater1 rated No and Rater2 rated Yes.

- There were 6 paintings that Rater1 rated Yes and Rater2 rated No.

- There were 13 paintings that both raters rated Yes.

In the final table in the output we can see that Cohen’s Kappa is calculated to be .309.
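As a check on the SPSS result, the same value can be reproduced by hand from the four crosstab counts listed above. The following Python sketch is only an illustration of the arithmetic and is not part of the SPSS procedure; the variable names are arbitrary.

```python
# Crosstab counts reported in the SPSS output
# rows = Rater1 (No, Yes), columns = Rater2 (No, Yes)
table = [
    [7, 4],   # Rater1 = No
    [6, 13],  # Rater1 = Yes
]

n = sum(sum(row) for row in table)  # 30 paintings in total

# Observed agreement: the diagonal cells (No/No and Yes/Yes)
po = sum(table[i][i] for i in range(len(table))) / n

# Chance agreement: product of matching row and column totals
row_totals = [sum(row) for row in table]        # Rater1: 11 No, 19 Yes
col_totals = [sum(col) for col in zip(*table)]  # Rater2: 13 No, 17 Yes
pe = sum(r * c for r, c in zip(row_totals, col_totals)) / n**2

kappa = (po - pe) / (1 - pe)
print(f"po={po:.3f}, pe={pe:.3f}, kappa={kappa:.3f}")
# prints: po=0.667, pe=0.518, kappa=0.309
```

The raters agree on 20 of the 30 paintings (po = 0.667), but about 0.518 agreement would be expected by chance alone, which is why Kappa is much lower than the raw agreement rate.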

We can refer to the following table to understand how to interpret this value:

Based on the table, a Kappa of .309 indicates only a “fair” level of agreement between the two raters.
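The lookup can also be written as a small helper. This sketch assumes the commonly cited Landis and Koch (1977) benchmarks; the cut-offs in the table used in this tutorial may differ slightly, and the function name `interpret_kappa` is hypothetical.

```python
def interpret_kappa(kappa):
    """Map a kappa value to a descriptive agreement label."""
    # Assumption: cut-offs follow the Landis and Koch (1977) benchmarks,
    # which may not match this tutorial's own table exactly.
    if kappa < 0:
        return "poor"
    if kappa <= 0.20:
        return "slight"
    if kappa <= 0.40:
        return "fair"
    if kappa <= 0.60:
        return "moderate"
    if kappa <= 0.80:
        return "substantial"
    return "almost perfect"


print(interpret_kappa(0.309))  # prints: fair
```

These labels are conventions rather than strict rules, so it is worth reporting the Kappa value itself alongside any verbal description.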
