
Cohen’s Kappa is a measure of inter-rater agreement between two raters, commonly used in fields such as psychology and medicine. In Python, you can calculate Cohen’s Kappa with the cohen_kappa_score() function from the scikit-learn library. This function takes two lists of ratings, one from each rater, and returns a score of at most 1 that represents the level of agreement between the two raters; higher values indicate stronger agreement.

In statistics, Cohen’s Kappa is used to measure the level of agreement between two raters or judges who each classify items into mutually exclusive categories.

The formula for Cohen’s Kappa is:

$$\kappa = \frac{p_o - p_e}{1 - p_e}$$

where:

- $p_o$: Relative observed agreement among raters

- $p_e$: Hypothetical probability of chance agreement
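To make the two quantities concrete, here is a minimal from-scratch sketch of the formula in plain Python. The function name `cohens_kappa` is hypothetical (it is not the scikit-learn API), and it assumes both raters label the same items in the same order. It estimates the chance agreement from each rater's own label frequencies.

```python
from collections import Counter


def cohens_kappa(rater1, rater2):
    """Hypothetical helper: Cohen's Kappa for two equal-length lists of labels."""
    if len(rater1) != len(rater2) or not rater1:
        raise ValueError("both raters must rate the same, non-empty set of items")
    n = len(rater1)

    # p_o: fraction of items on which the two raters give the same label
    p_o = sum(a == b for a, b in zip(rater1, rater2)) / n

    # p_e: chance agreement, i.e. the probability that both raters pick the same
    # label if each labels independently at their own observed rates
    counts1 = Counter(rater1)
    counts2 = Counter(rater2)
    p_e = sum(counts1[label] * counts2[label] for label in counts1) / n**2

    if p_e == 1:
        # Both raters used one identical label for every item; kappa is undefined.
        raise ValueError("kappa is undefined when p_e == 1")
    return (p_o - p_e) / (1 - p_e)


# Ratings from the museum-curator example later in this article
rater1 = [0, 1, 1, 1, 0, 0, 1, 0, 1, 0, 1, 1, 0, 1, 0]
rater2 = [0, 0, 1, 1, 0, 1, 0, 0, 1, 1, 0, 1, 0, 1, 0]
print(round(cohens_kappa(rater1, rater2), 5))  # 0.33628
```

Because it counts labels generically, this sketch also works for more than two categories, but it does not implement weighted kappa.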

Rather than just calculating the percentage of items that the raters agree on, Cohen’s Kappa attempts to account for the fact that the raters may happen to agree on some items purely by chance.

The value of Cohen’s Kappa is at most 1 and can be negative, where:

- 1 indicates perfect agreement between the two raters

- 0 indicates agreement no better than would be expected by chance

- Values below 0 indicate less agreement than would be expected by chance
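The short sketch below contrasts raw percent agreement with Cohen’s Kappa on a few made-up rating pairs (illustration only, not real data). The helper names `cohens_kappa` and `percent_agreement` are hypothetical. When both raters say "1" almost every time, they agree on 80% of items largely by chance, so kappa ends up slightly negative; identical ratings give 1, and fully opposite balanced ratings give -1.

```python
from collections import Counter


def cohens_kappa(rater1, rater2):
    """Hypothetical helper: Cohen's Kappa for two equal-length label lists (assumes p_e < 1)."""
    n = len(rater1)
    p_o = sum(a == b for a, b in zip(rater1, rater2)) / n
    c1, c2 = Counter(rater1), Counter(rater2)
    p_e = sum(c1[k] * c2[k] for k in c1) / n**2
    return (p_o - p_e) / (1 - p_e)


def percent_agreement(rater1, rater2):
    """Raw share of items with matching labels, with no chance correction."""
    return sum(a == b for a, b in zip(rater1, rater2)) / len(rater1)


# Made-up ratings for illustration only
identical = ([0, 1, 0, 1], [0, 1, 0, 1])
opposite = ([0, 1, 0, 1], [1, 0, 1, 0])
# Both raters say "1" almost every time, so many agreements happen by chance
skewed = ([1, 1, 1, 1, 1, 1, 1, 1, 1, 0], [1, 1, 1, 1, 1, 1, 1, 1, 0, 1])

for name, (a, b) in [("identical", identical), ("opposite", opposite), ("skewed", skewed)]:
    print(name, percent_agreement(a, b), round(cohens_kappa(a, b), 3))
# identical 1.0 1.0
# opposite 0.0 -1.0
# skewed 0.8 -0.111
```

In the skewed case, $p_o = 0.8$ but $p_e = 0.9 \cdot 0.9 + 0.1 \cdot 0.1 = 0.82$, so the observed agreement falls just short of what chance alone would produce.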

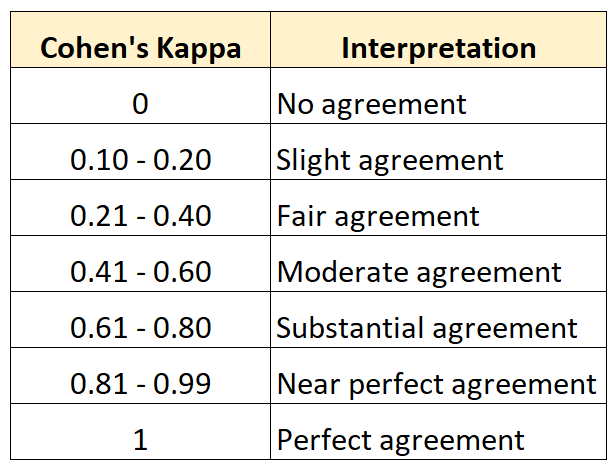

The following table summarizes how to interpret different values for Cohen’s Kappa:

The following example shows how to calculate Cohen’s Kappa in Python.

Example: Calculating Cohen’s Kappa in Python

Suppose two art museum curators are asked to rate 15 paintings on whether they’re good enough to be shown in a new exhibit.

The following code shows how to use the cohen_kappa_score() function from the sklearn library to calculate Cohen’s Kappa for the two raters:

```python
from sklearn.metrics import cohen_kappa_score

# define the ratings from each rater (one entry per painting)
rater1 = [0, 1, 1, 1, 0, 0, 1, 0, 1, 0, 1, 1, 0, 1, 0]
rater2 = [0, 0, 1, 1, 0, 1, 0, 0, 1, 1, 0, 1, 0, 1, 0]

# calculate Cohen's Kappa
print(cohen_kappa_score(rater1, rater2))
```

0.33628318584070793

Cohen’s Kappa turns out to be 0.33628.

Based on the table from earlier, we would say that the two raters only had a “fair” level of agreement.
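As a cross-check on this result, the same value can be reproduced by hand from the formula. The sketch below uses Python's standard fractions module so that $p_o$, $p_e$, and kappa stay exact; it assumes the ratings are coded 0/1 as in the example, and the variable names are illustrative.

```python
from fractions import Fraction

rater1 = [0, 1, 1, 1, 0, 0, 1, 0, 1, 0, 1, 1, 0, 1, 0]
rater2 = [0, 0, 1, 1, 0, 1, 0, 0, 1, 1, 0, 1, 0, 1, 0]
n = len(rater1)  # 15 paintings

# Observed agreement: the curators give the same rating to 10 of the 15 paintings
agreements = sum(a == b for a, b in zip(rater1, rater2))
p_o = Fraction(agreements, n)

# Chance agreement: P(both rate 1) + P(both rate 0), using each curator's own rates
yes1, yes2 = sum(rater1), sum(rater2)  # 8 and 7 ratings of 1, respectively
p_e = Fraction(yes1, n) * Fraction(yes2, n) + Fraction(n - yes1, n) * Fraction(n - yes2, n)

kappa = (p_o - p_e) / (1 - p_e)
print(p_o, p_e, kappa)  # 2/3 112/225 38/113
```

Exactly, kappa is 38/113, which is approximately 0.33628 and matches the value returned by cohen_kappa_score().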

You can also calculate this metric with the statsmodels library.

Note: The complete documentation for the cohen_kappa_score() function is available in the scikit-learn documentation.
