
Multiple R is a measure of how well a linear regression model fits the data, and is calculated by taking the correlation coefficient between the observed and predicted values. R-squared, also known as the coefficient of determination, is a measure of how much of the variance in the dependent variable is explained by the independent variables in the model and is calculated by taking the square of the multiple R.
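As a rough illustration of these two definitions, the sketch below fits an ordinary least-squares model with NumPy to hypothetical synthetic data (nothing here comes from the example later in this article). It takes multiple R as the Pearson correlation between the observed and fitted values, then squares it to get R-squared. The helper names `fit_ols` and `multiple_r` are illustrative, not part of any library.

```python
import numpy as np

def fit_ols(X, y):
    """Ordinary least squares with an intercept; returns the fitted values."""
    # Prepend a column of ones so the model includes an intercept term
    X1 = np.column_stack([np.ones(len(y)), X])
    coef, *_ = np.linalg.lstsq(X1, y, rcond=None)
    return X1 @ coef

def multiple_r(y, y_hat):
    """Multiple R: Pearson correlation between observed and predicted values."""
    return np.corrcoef(y, y_hat)[0, 1]

# Hypothetical synthetic data: 2 predictors, 50 observations
rng = np.random.default_rng(0)
X = rng.normal(size=(50, 2))
y = 3.0 + 2.0 * X[:, 0] - 1.0 * X[:, 1] + rng.normal(scale=1.0, size=50)

y_hat = fit_ols(X, y)
r = multiple_r(y, y_hat)
r_squared = r ** 2  # R-squared is the square of multiple R
print(f"Multiple R = {r:.3f}, R-squared = {r_squared:.3f}")
```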

When you fit a regression model using most statistical software, you’ll often notice the following two values in the output:

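Multiple R: The multiple correlation coefficient, i.e. the correlation between the observed values of the response variable and the values predicted from the predictor variables by the model.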

R-Squared: This is calculated as $(\text{Multiple } R)^2$ and it represents the proportion of the variance in the response variable of a regression model that can be explained by the predictor variables. This value ranges from 0 to 1.
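For reference, the "proportion of variance" interpretation comes from the usual definition of R-squared below, where $y_i$ are the observed responses, $\hat{y}_i$ the model's predicted values, and $\bar{y}$ the mean response. For a least-squares fit that includes an intercept, this quantity equals the square of multiple R and lies between 0 and 1; for models fit in other ways, the two need not agree.

$$
R^2 = 1 - \frac{SS_{\text{res}}}{SS_{\text{tot}}} = 1 - \frac{\sum_i (y_i - \hat{y}_i)^2}{\sum_i (y_i - \bar{y})^2}
$$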

In practice, we’re often interested in the R-squared value because it tells us how useful the predictor variables are at predicting the value of the response variable.

However, each time we add a new predictor variable to the model, the R-squared will never decrease, and it usually increases, even if the predictor variable isn’t useful.
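The sketch below illustrates this on hypothetical synthetic data: the response depends on a single predictor, and pure-noise columns are then added one at a time. Because each larger model contains the smaller one, least squares can always reproduce the smaller fit by giving the new column a zero coefficient, so R-squared cannot go down. The helper `r_squared` is a hypothetical name used only for this sketch.

```python
import numpy as np

def r_squared(X, y):
    """R-squared of a least-squares fit with intercept: 1 - SS_res / SS_tot."""
    X1 = np.column_stack([np.ones(len(y)), X])
    coef, *_ = np.linalg.lstsq(X1, y, rcond=None)
    resid = y - X1 @ coef
    return 1.0 - (resid @ resid) / np.sum((y - y.mean()) ** 2)

# Hypothetical data: y depends only on x; the extra columns are pure noise
rng = np.random.default_rng(1)
n = 30
x = rng.normal(size=(n, 1))
y = 1.0 + 0.5 * x[:, 0] + rng.normal(size=n)

X = x
history = [r_squared(X, y)]
for _ in range(5):
    noise = rng.normal(size=(n, 1))  # a useless predictor
    X = np.column_stack([X, noise])
    history.append(r_squared(X, y))  # never lower than the previous value

print([round(v, 4) for v in history])
```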

The adjusted R-squared is a modified version of R-squared that adjusts for the number of predictors in a regression model. It is calculated as:

$$\text{Adjusted } R^2 = 1 - \frac{(1 - R^2)(n - 1)}{n - k - 1}$$

where:

- $R^2$: The R-squared of the model

- n: The number of observations

- k: The number of predictor variables
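A direct translation of the formula into a small Python function might look like the following. The name `adjusted_r2` is illustrative, and the sketch assumes k counts the predictor variables only, not the intercept.

```python
def adjusted_r2(r2: float, n: int, k: int) -> float:
    """Adjusted R-squared = 1 - (1 - R^2) * (n - 1) / (n - k - 1).

    r2: R-squared of the model
    n:  number of observations
    k:  number of predictor variables (intercept not counted)
    """
    if n - k - 1 <= 0:
        # The formula is undefined when there are no residual degrees of freedom
        raise ValueError("need n > k + 1 observations")
    return 1.0 - (1.0 - r2) * (n - 1) / (n - k - 1)
```

With k = 0 the adjustment factor is 1, so adjusted R-squared equals R-squared; for k ≥ 1 and R-squared below 1, it is always smaller than R-squared.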

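Since R-squared never decreases as you add more predictors to a model, adjusted R-squared can serve as a metric that tells you how useful a model is, adjusted for the number of predictors in the model. Unlike R-squared, adjusted R-squared can decrease when a new predictor adds little explanatory power.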

To gain a better understanding of each of these terms, consider the following example.

Example: Multiple R, R-Squared, & Adjusted R-Squared

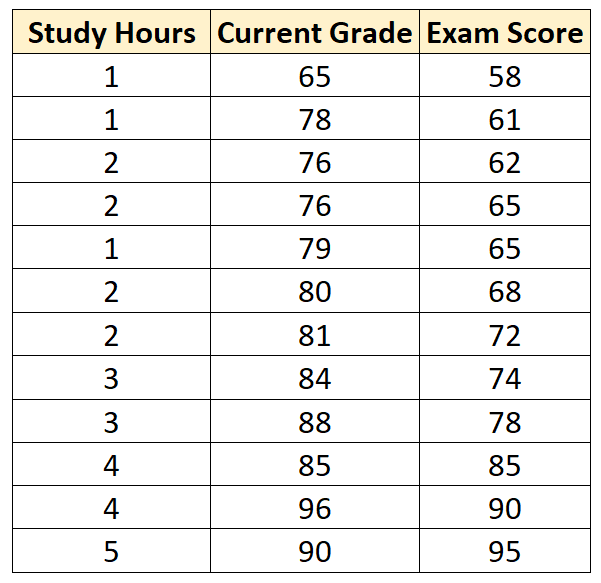

Suppose we have the following dataset, which contains three variables for 12 different students:

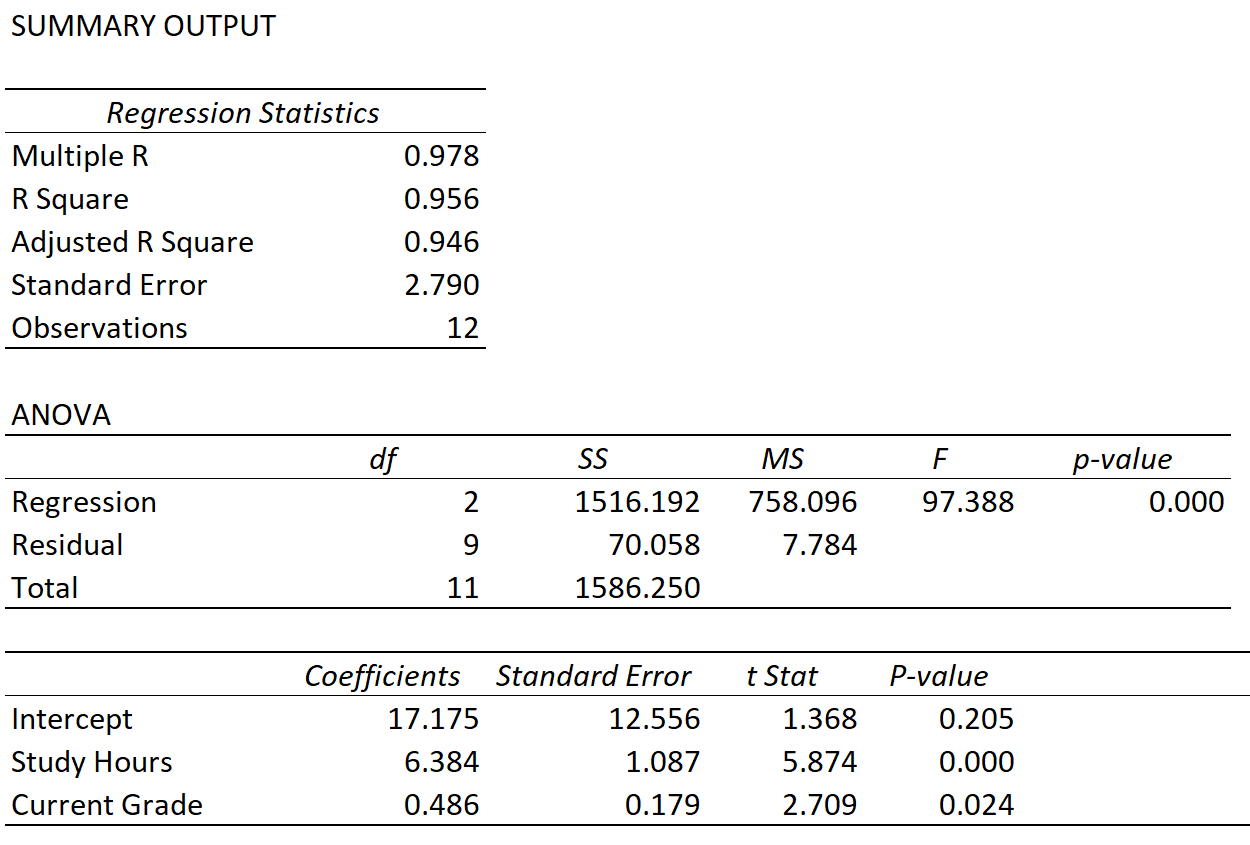

Suppose we fit a multiple linear regression model using Study Hours and Current Grade as the predictor variables and Exam Score as the response variable, and get the following output:

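We can observe the values for the following three metrics:

Multiple R: 0.978. This is the correlation between the observed exam scores and the exam scores predicted by the model.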

R Square: 0.956. This is calculated as $(\text{Multiple } R)^2 = (0.978)^2 \approx 0.956$. This tells us that 95.6% of the variation in exam scores can be explained by the number of hours the student spent studying and their current grade in the course.

Adjusted R-Square: 0.946. This is calculated as:

$$\text{Adjusted } R^2 = 1 - \frac{(1 - R^2)(n - 1)}{n - k - 1} = 1 - \frac{(1 - 0.956)(12 - 1)}{12 - 2 - 1} \approx 0.946$$

This represents the R-squared value, adjusted for the number of predictor variables in the model.
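The arithmetic in this example can be checked with a few lines of Python, using the rounded values reported in the output (n = 12 students, k = 2 predictors). This is only a re-computation of the numbers above, not a model fit.

```python
# Values read from the regression output in the example
multiple_r = 0.978
n, k = 12, 2  # 12 students; 2 predictors (Study Hours, Current Grade)

r2 = multiple_r ** 2  # about 0.956

# Use the reported (rounded) R-squared, as the text does. Because 0.978 is
# itself rounded, plugging in the unrounded 0.978**2 would give about 0.947.
r2_reported = 0.956
adj_r2 = 1 - (1 - r2_reported) * (n - 1) / (n - k - 1)

print(f"R-squared ~ {r2:.3f}, adjusted R-squared ~ {adj_r2:.3f}")
# R-squared ~ 0.956, adjusted R-squared ~ 0.946
```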

This metric is useful when comparing models with different numbers of predictors. Suppose, for example, that we fit another regression model with 10 predictors and found that its adjusted R-squared was 0.88. Because the model with just two predictors has the higher adjusted R-squared, it would be the preferred model.
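To see why adjusted R-squared is the fairer comparison here, we can invert the formula. Assuming the hypothetical 10-predictor model was fit to the same 12 students (n = 12, k = 10), an adjusted R-squared of 0.88 corresponds to a raw R-squared of about 0.989, which is higher than the two-predictor model's 0.956. Raw R-squared would therefore favor the larger model, while adjusted R-squared favors the smaller one. The function name `r2_from_adjusted` is illustrative.

```python
def r2_from_adjusted(adj_r2, n, k):
    """Invert Adjusted R^2 = 1 - (1 - R^2)(n - 1)/(n - k - 1) to recover R^2."""
    return 1 - (1 - adj_r2) * (n - k - 1) / (n - 1)

# Hypothetical 10-predictor model, assumed fit to the same 12 students
r2_ten = r2_from_adjusted(0.88, n=12, k=10)
print(f"{r2_ten:.3f}")  # 0.989
```

With only n - k - 1 = 12 - 10 - 1 = 1 residual degree of freedom, the larger model's near-perfect R-squared is heavily penalized.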