The assumption of equal variance is the assumption that the populations from which two or more groups are sampled have the same variance.

Many statistical tests that compare group means make this assumption. If it is violated, the results of those tests can become unreliable.

The most common statistical tests and procedures that make this assumption of equal variance include:

1. ANOVA

2. t-tests

3. Linear Regression

This tutorial explains the assumption made for each test, how to determine if this assumption is met, and what to do if it is violated.

Equal Variance Assumption in ANOVA

An ANOVA (“Analysis of Variance”) is used to determine whether or not there is a significant difference between the means of three or more independent groups.

Here’s an example of when we might use an ANOVA:

Suppose we recruit 90 people to participate in a weight-loss experiment. We randomly assign 30 people to use program A, B, or C for one month.

To see if the program has an impact on weight loss, we can perform a one-way ANOVA.

An ANOVA assumes that each of the groups has equal variance. There are two ways to test if this assumption is met:

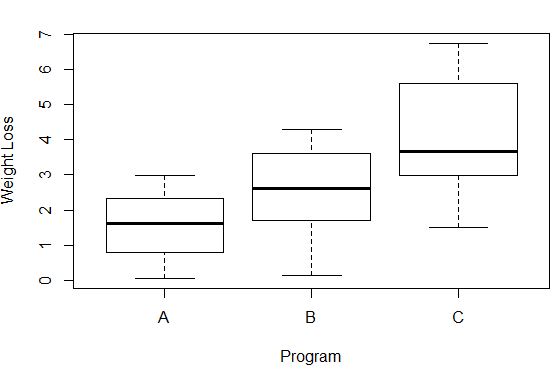

1. Create boxplots.

Boxplots offer a visual way to check the assumption of equal variances.

The spread of weight loss in each group is reflected in the length of each box, which spans the interquartile range. The longer the box, the more spread out the values are, which usually indicates a higher variance. For example, we can see that the variance is a bit higher for participants in program C compared to both program A and program B.
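As a numeric companion to the boxplots, the sketch below computes the box length (the interquartile range) and the sample variance for each group. The weight-loss values are made up for illustration and are not the data behind the plot.

```python
import numpy as np

# Made-up weight-loss values (in pounds) for illustration only.
groups = {
    "A": np.array([1.0, 2.0, 2.5, 3.0, 3.5, 4.0, 4.5, 5.0]),
    "B": np.array([1.5, 2.0, 3.0, 3.0, 3.5, 4.0, 4.5, 5.5]),
    "C": np.array([0.0, 1.0, 2.0, 3.0, 5.0, 6.0, 8.0, 9.0]),
}

summary = {}
for name, values in groups.items():
    q1, q3 = np.percentile(values, [25, 75])
    summary[name] = {
        "iqr": q3 - q1,                      # length of the box in a boxplot
        "variance": np.var(values, ddof=1),  # sample variance
    }
    print(name, summary[name])
```

In this made-up data, group C has both the longest box and the largest sample variance.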

2. Conduct Bartlett’s Test.

Bartlett’s Test tests the null hypothesis that the samples have equal variances vs. the alternative hypothesis that the samples do not have equal variances.
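Bartlett's Test can be run with `scipy.stats.bartlett`. The following is a minimal sketch on simulated data; the group means, standard deviations, and the 0.05 cutoff are assumptions chosen for illustration.

```python
import numpy as np
from scipy import stats

rng = np.random.default_rng(0)  # fixed seed so the sketch is repeatable

# Simulated weight loss for 30 people per program (not real data).
program_a = rng.normal(loc=3.0, scale=1.0, size=30)
program_b = rng.normal(loc=3.5, scale=1.0, size=30)
program_c = rng.normal(loc=4.0, scale=3.0, size=30)  # deliberately more spread

statistic, p_value = stats.bartlett(program_a, program_b, program_c)
print(f"Bartlett statistic = {statistic:.3f}, p-value = {p_value:.4f}")

if p_value < 0.05:
    print("Evidence that the group variances differ.")
else:
    print("No evidence against equal variances.")
```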

What if the equal variance assumption is violated?

In general, ANOVAs are considered to be fairly robust against violations of the equal variances assumption as long as each group has the same sample size.

However, if the sample sizes are not the same and this assumption is severely violated, you could instead run a Kruskal-Wallis Test, which is the non-parametric version of the one-way ANOVA.
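The Kruskal-Wallis Test is the non-parametric counterpart of the one-way ANOVA, and SciPy provides it as `scipy.stats.kruskal`. A minimal sketch, using made-up groups of unequal size:

```python
from scipy import stats

# Made-up weight-loss values with unequal group sizes (illustration only).
program_a = [2.1, 2.8, 3.0, 3.3, 3.9, 4.2]
program_b = [2.5, 3.1, 3.6, 4.0, 4.4, 4.9, 5.2, 5.8]
program_c = [0.5, 2.0, 4.5, 6.0, 7.5, 9.0, 10.5, 12.0, 13.5, 15.0]

h_statistic, p_value = stats.kruskal(program_a, program_b, program_c)
print(f"H = {h_statistic:.3f}, p-value = {p_value:.4f}")
```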

Equal Variance Assumption in t-tests

A two sample t-test is used to test whether or not the means of two populations are equal.

The test makes the assumption that the variances are equal between the two groups. There are two ways to test if this assumption is met:

1. Use the rule of thumb ratio.

As a rule of thumb, if the ratio of the larger variance to the smaller variance is less than 4, then we can assume the variances are approximately equal and use the two sample t-test.

For example, suppose sample 1 has a variance of 24.5 and sample 2 has a variance of 15.2. The ratio of the larger sample variance to the smaller sample variance would be calculated as 24.5 / 15.2 = 1.61.

Since this ratio is less than 4, we could assume that the variances between the two groups are approximately equal.
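The rule of thumb above can be sketched as a small helper. The function name `variance_ratio` is hypothetical; the two variances and the threshold of 4 come from the example in the text.

```python
def variance_ratio(var_1: float, var_2: float) -> float:
    """Return the larger variance divided by the smaller one."""
    larger, smaller = max(var_1, var_2), min(var_1, var_2)
    if smaller <= 0:
        raise ValueError("variances must be positive")
    return larger / smaller


ratio = variance_ratio(24.5, 15.2)
approximately_equal = ratio < 4  # rule-of-thumb threshold from the text
print(round(ratio, 2), approximately_equal)  # prints: 1.61 True
```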

2. Perform an F-test.

The F-test tests the null hypothesis that the samples have equal variances vs. the alternative hypothesis that the samples do not have equal variances.

If the p-value of the test is less than some significance level (like 0.05), then we have evidence to say that the two samples do not have equal variances.
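A minimal sketch of a two-sided F-test for equal variances follows. It computes the ratio of the two sample variances and looks up the p-value with `scipy.stats.f`. The helper name `f_test_equal_variances` and the sample values are made up for illustration.

```python
import numpy as np
from scipy import stats


def f_test_equal_variances(sample_1, sample_2):
    """Two-sided F-test of H0: the two population variances are equal."""
    x = np.asarray(sample_1, dtype=float)
    y = np.asarray(sample_2, dtype=float)
    f_stat = np.var(x, ddof=1) / np.var(y, ddof=1)
    df_1, df_2 = x.size - 1, y.size - 1
    # Two-sided p-value: double the smaller tail area, capped at 1.
    tail = min(stats.f.cdf(f_stat, df_1, df_2), stats.f.sf(f_stat, df_1, df_2))
    return f_stat, min(2.0 * tail, 1.0)


# Made-up samples for illustration only.
sample_1 = [12.1, 14.3, 9.8, 15.6, 11.2, 13.9, 10.4, 16.0]
sample_2 = [12.5, 12.9, 13.1, 12.7, 13.4, 12.8, 13.0, 12.6]

f_stat, p_value = f_test_equal_variances(sample_1, sample_2)
print(f"F = {f_stat:.2f}, p-value = {p_value:.4f}")
```

Note that this F-test is sensitive to departures from normality, so a small p-value is best read alongside a plot of the data.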

What if the equal variance assumption is violated?

If this assumption is violated, then we can perform Welch’s t-test, which is a version of the two sample t-test that does not make the assumption that the two samples have equal variances.
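One test that does not assume equal variances is Welch's t-test, which SciPy runs when `equal_var=False` is passed to `scipy.stats.ttest_ind`. A minimal sketch on made-up samples:

```python
from scipy import stats

# Made-up samples with visibly different spreads (illustration only).
sample_1 = [12.1, 14.3, 9.8, 15.6, 11.2, 13.9, 10.4, 16.0]
sample_2 = [15.5, 15.9, 16.1, 15.7, 16.4, 15.8, 16.0, 15.6]

# equal_var=False requests Welch's t-test instead of the pooled-variance test.
t_stat, p_value = stats.ttest_ind(sample_1, sample_2, equal_var=False)
print(f"t = {t_stat:.3f}, p-value = {p_value:.4f}")
```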

Equal Variance Assumption in Linear Regression

Linear regression is used to quantify the relationship between one or more predictor variables and a response variable.

Linear regression makes the assumption that the residuals have constant variance at every level of the predictor variable(s). This is known as homoscedasticity. When this is not the case, the residuals are said to suffer from heteroscedasticity and the results of the regression analysis become unreliable.

The most common way to determine if this assumption is met is to create a plot of residuals vs. fitted values. If the residuals in this plot seem to be scattered randomly around zero, then the assumption of homoscedasticity is likely met.

However, if there exists a systematic pattern in the residuals, such as a “cone” shape in which the spread of the residuals grows as the fitted values increase, then heteroscedasticity is a problem.
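The fitted values and residuals for such a plot can be computed with NumPy, as in the sketch below. The data are simulated so that the noise grows with the predictor, and the comparison of residual spread between the lower and upper halves of the fitted values is only a rough numeric stand-in for inspecting the plot, not a formal test.

```python
import numpy as np

rng = np.random.default_rng(1)  # fixed seed so the sketch is repeatable

# Simulated data whose noise grows with x, which should produce a cone shape.
x = np.linspace(1.0, 10.0, 200)
y = 2.0 + 3.0 * x + rng.normal(loc=0.0, scale=0.5 * x)

# Fit a simple linear regression by least squares.
slope, intercept = np.polyfit(x, y, deg=1)
fitted = intercept + slope * x
residuals = y - fitted

# Compare residual spread for the lower and upper halves of the fitted values.
order = np.argsort(fitted)
half = fitted.size // 2
low_spread = residuals[order[:half]].std(ddof=1)
high_spread = residuals[order[half:]].std(ddof=1)
spread_ratio = high_spread / low_spread
print(f"spread ratio (upper half / lower half) = {spread_ratio:.2f}")
```

Plotting `residuals` against `fitted` as a scatter plot gives the residuals vs. fitted values plot described above. A spread ratio well above 1 corresponds to the cone shape.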

What if the equal variance assumption is violated?

If this assumption is violated, the most common way to deal with it is to transform the response variable using one of the following three transformations:

1. Log Transformation: Transform the response variable from y to log(y).

2. Square Root Transformation: Transform the response variable from y to √y.

3. Cube Root Transformation: Transform the response variable from y to y^(1/3).

After one of these transformations, the problem of heteroscedasticity is often reduced or removed. It is worth checking the residuals vs. fitted values plot again after refitting the model.
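The three transformations can be applied with NumPy as sketched below. The response values are made up and strictly positive, because the log transformation is not defined for zero or negative values.

```python
import numpy as np

# Made-up, strictly positive response values (illustration only).
y = np.array([1.0, 8.0, 27.0, 64.0, 125.0])

log_y = np.log(y)    # requires y > 0
sqrt_y = np.sqrt(y)  # requires y >= 0
cbrt_y = np.cbrt(y)  # defined for negative values as well

print(log_y, sqrt_y, cbrt_y)
```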

Another way to fix heteroscedasticity is to use weighted least squares regression. This type of regression assigns a weight to each data point based on the variance of its fitted value.

Essentially, this gives small weights to data points that have higher variances, which shrinks their squared residuals. When the proper weights are used, this can eliminate the problem of heteroscedasticity.
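A minimal sketch of a weighted fit with NumPy follows. The helper name `weighted_least_squares` is hypothetical, the data are simulated, and the choice of weights (1 / x²) assumes the error variance is proportional to x², which will not hold for every dataset.

```python
import numpy as np


def weighted_least_squares(x, y, weights):
    """Fit y = b0 + b1 * x by minimizing sum(weights * residual**2)."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    w = np.asarray(weights, dtype=float)
    design = np.column_stack([np.ones_like(x), x])
    # Scaling each row by sqrt(w) turns the weighted problem into ordinary
    # least squares on the rescaled data.
    root_w = np.sqrt(w)
    coefficients, *_ = np.linalg.lstsq(
        design * root_w[:, None], y * root_w, rcond=None
    )
    return coefficients  # array([intercept, slope])


rng = np.random.default_rng(2)  # fixed seed so the sketch is repeatable
x = np.linspace(1.0, 10.0, 100)
y = 2.0 + 3.0 * x + rng.normal(loc=0.0, scale=0.5 * x)

# Assumption for this sketch: the error variance is proportional to x**2,
# so each point is weighted by 1 / x**2.
intercept, slope = weighted_least_squares(x, y, weights=1.0 / x**2)
print(f"intercept = {intercept:.2f}, slope = {slope:.2f}")
```

In practice the weights are usually estimated from the data, for example from the residuals of an initial unweighted fit.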