The F1 score is a measure of a classification model’s performance. It is calculated as the harmonic mean of precision and recall, which measure how many of the model’s positive predictions are correct and how many of the actual positives the model finds, respectively. An F1 score of 1 is the best possible score, meaning the model has perfect precision and recall. A score of 0 is the worst possible score and occurs when the model produces no true positives.

When using classification models in machine learning, a common metric that we use to assess the quality of the model is the F1 score.

This metric is calculated as:

F1 Score = 2 * (Precision * Recall) / (Precision + Recall)

where:

- Precision: Correct positive predictions relative to total positive predictions

- Recall: Correct positive predictions relative to total actual positives
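The definitions above can be sketched as a small Python function. This is only an illustration: the function name `f1_from_counts` and the choice to report an undefined ratio (zero denominator) as 0.0 are assumptions made here, not something the formula itself prescribes.

```python
def f1_from_counts(tp, fp, fn):
    """Return (precision, recall, f1) from confusion-matrix counts.

    tp: true positives, fp: false positives, fn: false negatives.
    """
    # Assumed convention: a ratio with a zero denominator is reported as 0.0.
    precision = tp / (tp + fp) if (tp + fp) > 0 else 0.0
    recall = tp / (tp + fn) if (tp + fn) > 0 else 0.0

    # If both precision and recall are 0, the F1 formula is undefined too.
    if precision + recall == 0:
        return precision, recall, 0.0

    f1 = 2 * (precision * recall) / (precision + recall)
    return precision, recall, f1


# Hypothetical counts, chosen only to exercise the function.
print(f1_from_counts(tp=8, fp=2, fn=8))
print(f1_from_counts(tp=0, fp=5, fn=5))
```

Note that true negatives never enter the calculation, so the F1 score says nothing about how well the model handles the negative class.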

For example, suppose we use a logistic regression model to predict whether or not 400 different college basketball players get drafted into the NBA.

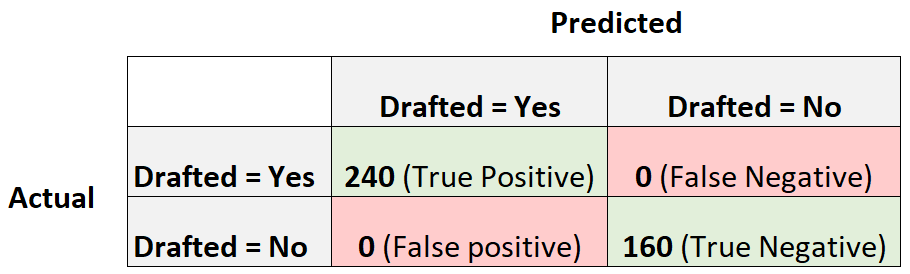

The following confusion matrix summarizes the predictions made by the model:

Here is how to calculate the F1 score of the model:

Precision = True Positive / (True Positive + False Positive) = 120 / (120+70) = 0.6316

Recall = True Positive / (True Positive + False Negative) = 120 / (120+40) = 0.75

F1 Score = 2 * (0.6316 * 0.75) / (0.6316 + 0.75) = 0.6857
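As a quick check of the arithmetic above, the sketch below recomputes the score three ways: with the formula from the text, with Python's built-in `statistics.harmonic_mean`, and with the algebraically equivalent form 2·TP / (2·TP + FP + FN). The variable names are illustrative.

```python
from statistics import harmonic_mean

# Counts taken from the worked example in the text.
tp, fp, fn = 120, 70, 40

precision = tp / (tp + fp)
recall = tp / (tp + fn)

# The formula as written in the text.
f1_formula = 2 * (precision * recall) / (precision + recall)

# F1 is the harmonic mean of precision and recall.
f1_harmonic = harmonic_mean([precision, recall])

# Equivalent form expressed directly in counts.
f1_counts = 2 * tp / (2 * tp + fp + fn)

print(round(precision, 4), round(recall, 4))
# prints: 0.6316 0.75
print(round(f1_formula, 4), round(f1_harmonic, 4), round(f1_counts, 4))
# prints: 0.6857 0.6857 0.6857
```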

What is a Good F1 Score?

One question students often have is:

What is a good F1 score?

In the simplest terms, higher F1 scores are generally better.

Recall that F1 scores can range from 0 to 1, with 1 representing a model that perfectly classifies each observation into the correct class and 0 representing a model that fails to correctly identify a single positive observation.

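To illustrate this, suppose a logistic regression model classifies every one of the 400 players correctly. Here is how to calculate the F1 score of that model: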

Precision = True Positive / (True Positive + False Positive) = 240/ (240+0) = 1

Recall = True Positive / (True Positive + False Negative) = 240 / (240+0) = 1

F1 Score = 2 * (1 * 1) / (1 + 1) = 1

The F1 score is equal to 1 because the model perfectly classifies each of the 400 observations into the correct class.

Now consider another logistic regression model that simply predicts every player to get drafted:

Here is how to calculate the F1 score of the model:

Precision = True Positive / (True Positive + False Positive) = 160/ (160+240) = 0.4

Recall = True Positive / (True Positive + False Negative) = 160 / (160+0) = 1

F1 Score = 2 * (.4 * 1) / (.4 + 1) = 0.5714

This would be considered a baseline model against which we can compare our logistic regression model, since it makes the same prediction for every single observation in the dataset.
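The baseline described above can be reproduced in a few lines of plain Python. The label lists below are reconstructed from the counts in the text (160 drafted players, 240 not drafted), with 1 standing for "drafted"; that encoding and the variable names are assumptions for illustration.

```python
# Actual outcomes: 160 drafted (1) and 240 not drafted (0), per the text.
y_true = [1] * 160 + [0] * 240

# Baseline: predict "drafted" for every player.
y_pred = [1] * len(y_true)

pairs = list(zip(y_true, y_pred))
tp = sum(1 for t, p in pairs if t == 1 and p == 1)
fp = sum(1 for t, p in pairs if t == 0 and p == 1)
fn = sum(1 for t, p in pairs if t == 1 and p == 0)

precision = tp / (tp + fp)
recall = tp / (tp + fn)
f1 = 2 * (precision * recall) / (precision + recall)

# For an always-positive baseline, precision equals the share of positives
# and recall is 1, so F1 simplifies to 2 * share / (1 + share).
share = sum(y_true) / len(y_true)
f1_shortcut = 2 * share / (1 + share)

print(tp, fp, fn)
# prints: 160 240 0
print(round(precision, 4), round(recall, 4), round(f1, 4), round(f1_shortcut, 4))
# prints: 0.4 1.0 0.5714 0.5714
```

The shortcut shows that the baseline F1 score depends only on how common the positive class is, so the bar a useful model must clear rises as positives become more frequent.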

The greater our F1 score is compared to that of a baseline model, the more useful our model is.

Recall from earlier that our model had an F1 score of 0.6857. This isn’t much greater than 0.5714, which indicates that our model is more useful than a baseline model but not by much.

On Comparing F1 Scores

In practice, we typically use the following process to pick the “best” model for a classification problem:

Step 1: Fit a baseline model that makes the same prediction for every observation.

Step 2: Fit several different classification models and calculate the F1 score for each model.

Step 3: Choose the model with the highest F1 score as the “best” model, verifying that it produces a higher F1 score than the baseline model.
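The three steps above can be sketched as follows. The counts for the baseline and the logistic regression model come from the examples in this article; the entry named `hypothetical_tree` and its counts are made up purely to show the comparison, and the helper name `f1_score_from_counts` is likewise an assumption.

```python
def f1_score_from_counts(tp, fp, fn):
    """F1 from counts, using the equivalent form 2*TP / (2*TP + FP + FN)."""
    denom = 2 * tp + fp + fn
    # Assumed convention: report 0.0 when the score is undefined.
    return 2 * tp / denom if denom else 0.0


# Step 1: baseline that predicts "drafted" for every player (from the text).
baseline_f1 = f1_score_from_counts(tp=160, fp=240, fn=0)

# Step 2: candidate models as (tp, fp, fn) counts.
candidates = {
    "logistic_regression": (120, 70, 40),  # counts from the text
    "hypothetical_tree": (130, 50, 30),    # made-up counts for illustration
}
scores = {name: f1_score_from_counts(*counts) for name, counts in candidates.items()}

# Step 3: pick the highest F1 score and verify it beats the baseline.
best_name = max(scores, key=scores.get)
beats_baseline = scores[best_name] > baseline_f1

for name, score in scores.items():
    print(f"{name}: {score:.4f}")
print(f"baseline: {baseline_f1:.4f}")
print(f"best: {best_name}, beats baseline: {beats_baseline}")
```

In a real workflow the counts would come from predictions on held-out data rather than being typed in by hand.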

There is no specific value that is considered a “good” F1 score, which is why we generally pick the classification model that produces the highest F1 score.