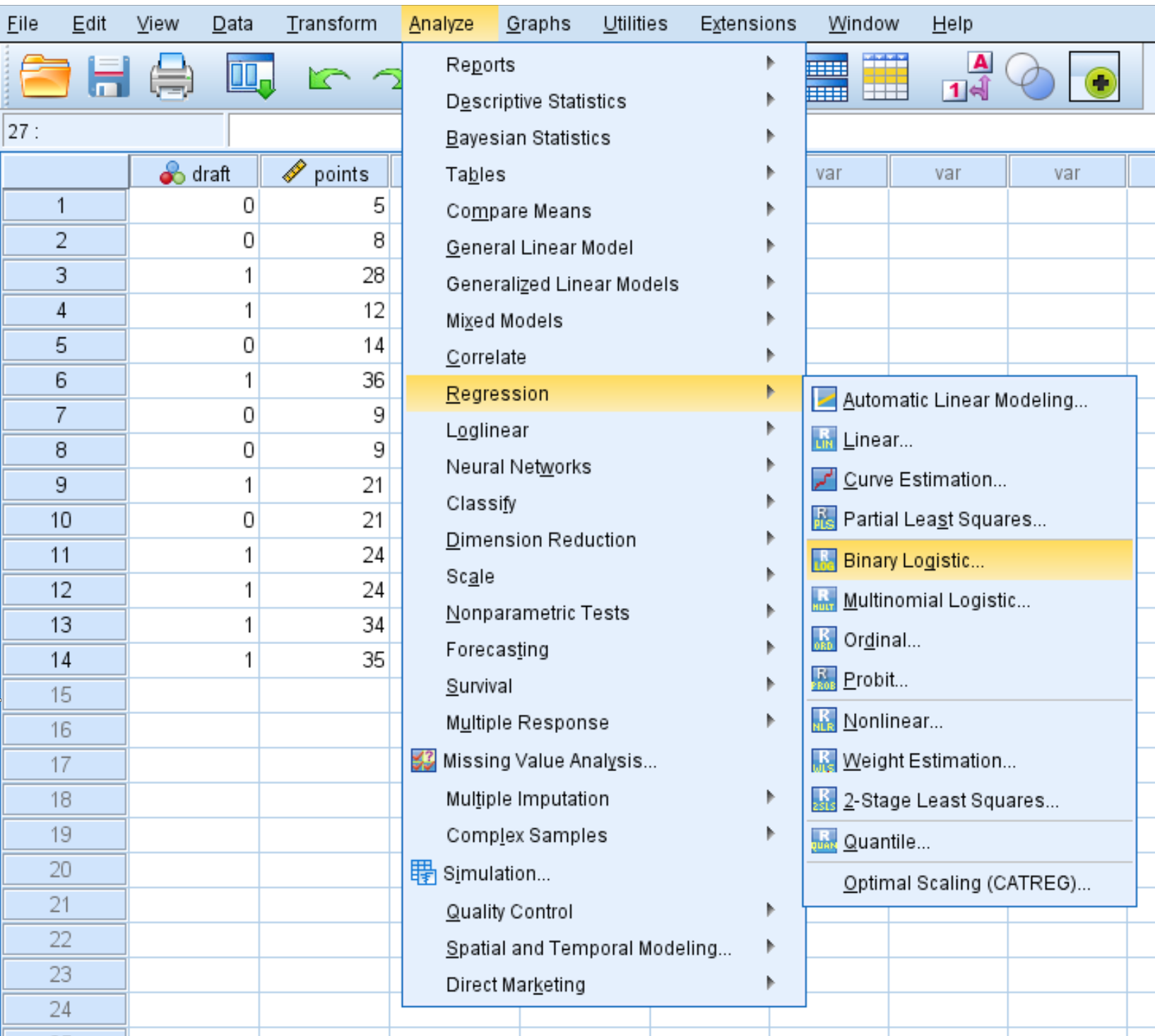

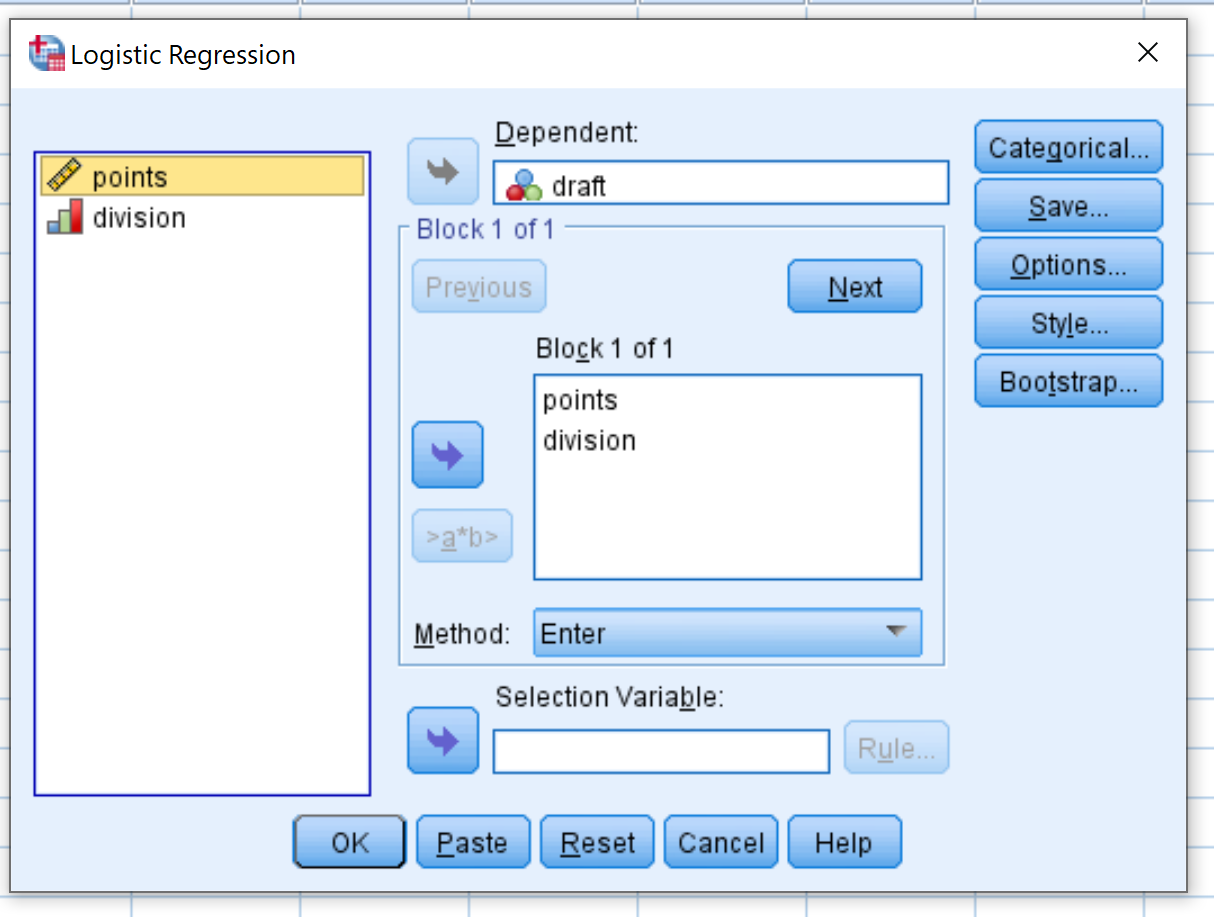

To perform logistic regression in SPSS, click “Analyze” in the main menu, then “Regression”, then “Binary Logistic”. Enter the dependent variable first, followed by the independent variables, then click “OK” to display the results of the regression.

Logistic regression is a method that we use to fit a regression model when the response variable is binary.

This tutorial explains how to perform logistic regression in SPSS.

Example: Logistic Regression in SPSS

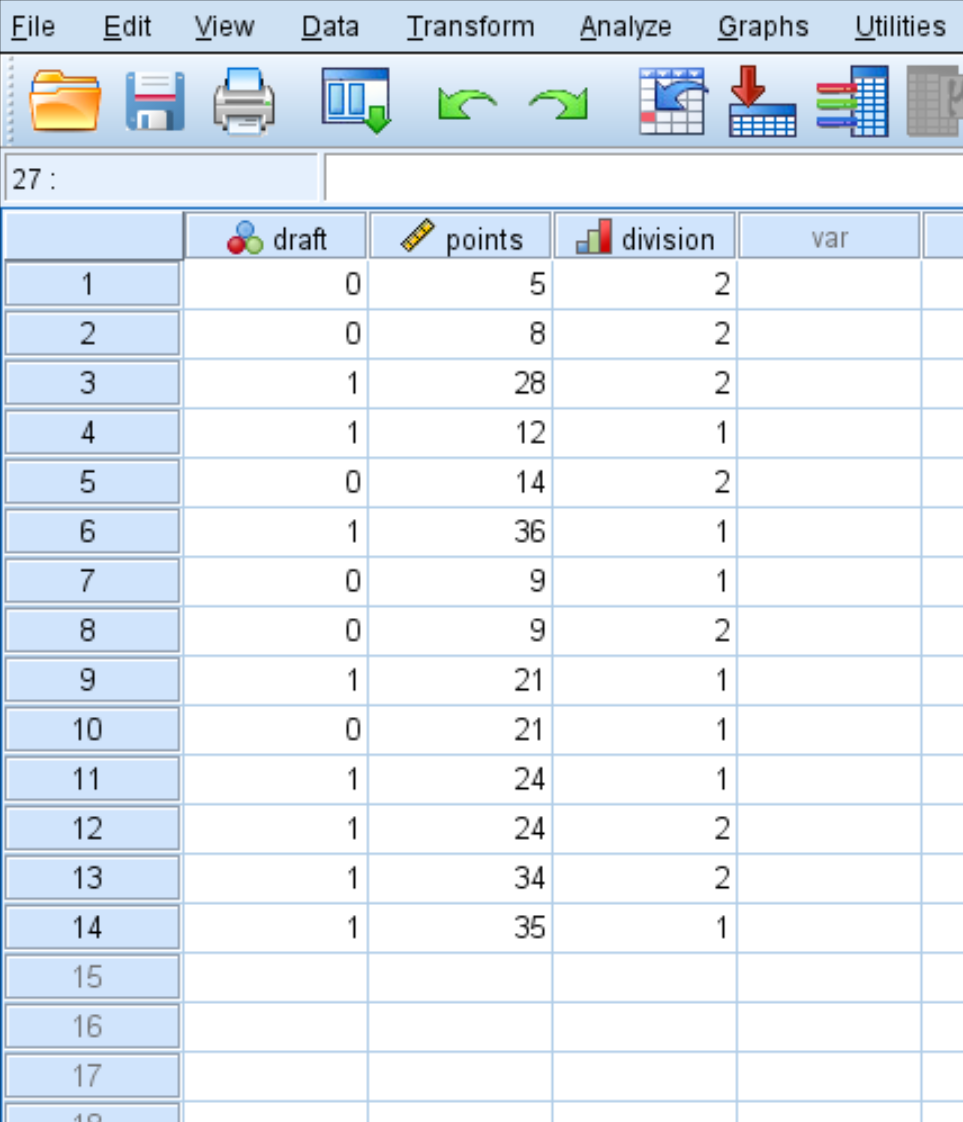

Use the following steps to perform logistic regression in SPSS for a dataset that shows whether or not college basketball players got drafted into the NBA (draft: 0 = no, 1 = yes) based on their average points per game and division level.

Step 1: Input the data.

First, input the following data:

Step 2: Perform logistic regression.

Click the Analyze tab, then Regression, then Binary Logistic:

In the new window that pops up, drag the binary response variable draft into the box labelled Dependent. Then drag the two predictor variables points and division into the Covariates box under Block 1 of 1. Leave the Method set to Enter. Then click OK.

Step 3: Interpret the output.

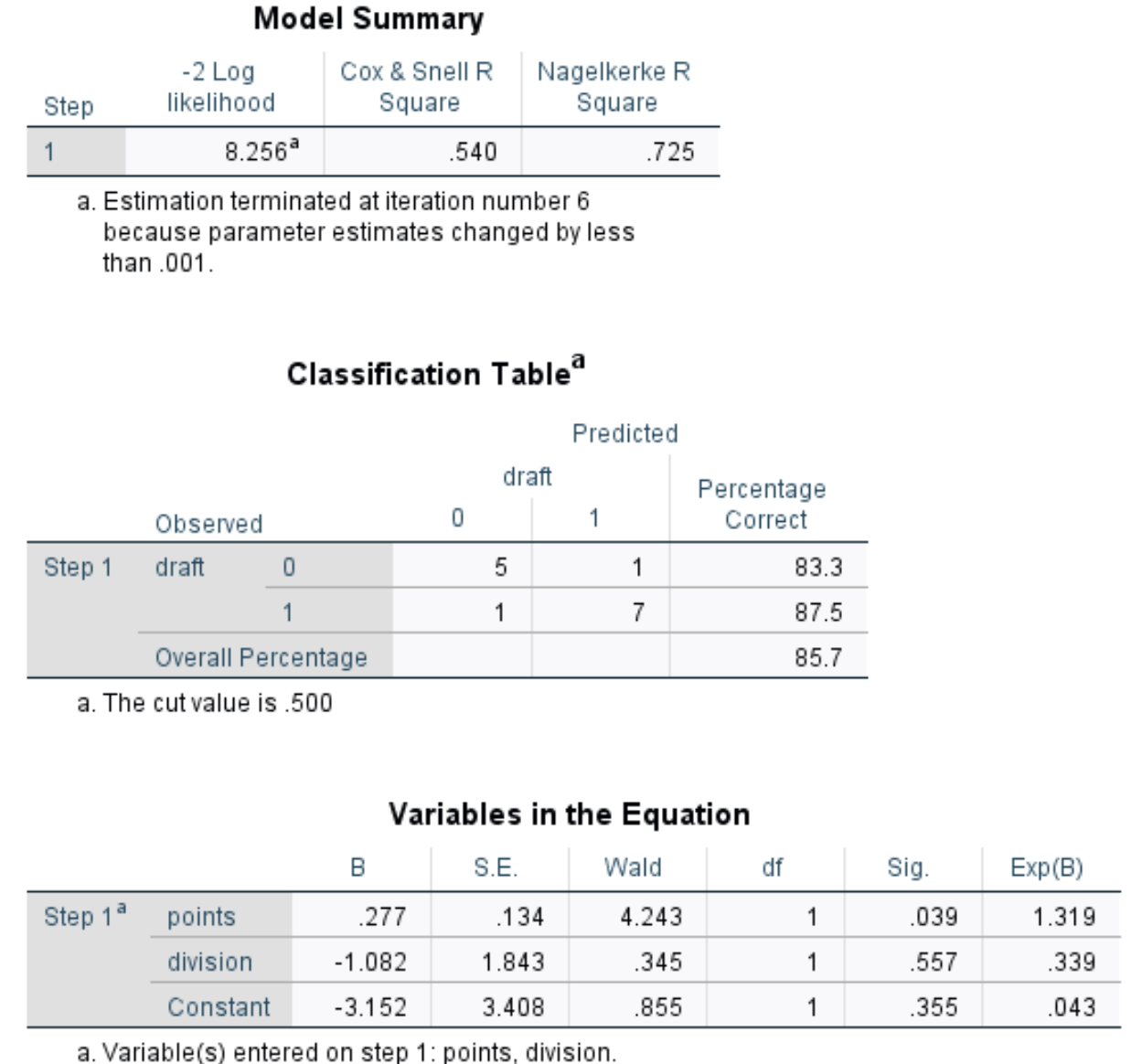

Once you click OK, the output of the logistic regression will appear:

Here is how to interpret the output:

Model Summary: The most useful metric in this table is the Nagelkerke R Square, a pseudo R-squared that approximates the proportion of the variation in the response variable that can be explained by the predictor variables. In this case, points and division are able to explain roughly 72.5% of the variability in draft.

Variables in the Equation: This last table provides us with several useful metrics, including:

- Wald: The Wald test statistic for each predictor variable, which is used to determine whether or not each predictor variable is statistically significant.

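- Sig: The p-value that corresponds to the Wald test statistic for each predictor variable. We see that the p-value for points is .039 and the p-value for division is .557. At a significance level of .05, points is a statistically significant predictor of draft, while division is not.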

- Exp(B): The odds ratio for each predictor variable. This tells us the factor by which the odds of a player getting drafted are multiplied for a one unit increase in a given predictor variable, holding the other predictor constant. For example, the odds of a player in division 2 getting drafted are just .339 times the odds of a player in division 1 getting drafted. Similarly, each additional point per game multiplies the odds of a player getting drafted by 1.319, which is an increase of about 31.9%.
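The link between the B column and the Exp(B) column can be checked by hand, since each odds ratio is simply e raised to the coefficient. The following is a minimal Python sketch of that check; the coefficient values are the ones reported later in this tutorial, and the function names `odds_ratio` and `percent_change_in_odds` are hypothetical helpers, not part of SPSS.

```python
import math

# Coefficients (column B) as reported in this tutorial's output.
COEFFICIENTS = {"points": 0.277, "division": -1.082}


def odds_ratio(b):
    """Convert a logistic regression coefficient into an odds ratio, Exp(B)."""
    return math.exp(b)


def percent_change_in_odds(b):
    """Percent change in the odds for a one unit increase in the predictor."""
    return (odds_ratio(b) - 1) * 100


if __name__ == "__main__":
    for name, b in COEFFICIENTS.items():
        print(
            f"{name}: Exp(B) = {odds_ratio(b):.3f}, "
            f"change in odds = {percent_change_in_odds(b):+.1f}%"
        )
```

An odds ratio above 1 means the odds rise as the predictor increases, and an odds ratio below 1 means they fall.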

We can then use the coefficients (the values in the column labeled B) to predict the probability that a given player will get drafted, using the following formula:

$$\text{Probability} = \frac{e^{-3.152 + 0.277(\text{points}) - 1.082(\text{division})}}{1 + e^{-3.152 + 0.277(\text{points}) - 1.082(\text{division})}}$$

For example, the probability that a player who averages 20 points per game and plays in division 1 gets drafted can be calculated as:

$$\text{Probability} = \frac{e^{-3.152 + 0.277(20) - 1.082(1)}}{1 + e^{-3.152 + 0.277(20) - 1.082(1)}} = 0.787$$

Since this probability is greater than 0.5, we would predict that this player would get drafted.
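The same calculation can be reproduced outside of SPSS. The sketch below is a minimal Python version that uses the coefficients from the B column reported above; the function names `draft_probability` and `predict_drafted` are hypothetical, and the 0.5 cutoff is the threshold used in this example.

```python
import math

# Coefficients from the B column of the "Variables in the Equation" table.
INTERCEPT = -3.152
B_POINTS = 0.277
B_DIVISION = -1.082


def draft_probability(points, division):
    """Predicted probability of being drafted for the given predictor values."""
    log_odds = INTERCEPT + B_POINTS * points + B_DIVISION * division
    # Equivalent to e^x / (1 + e^x), written in the numerically safer form.
    return 1 / (1 + math.exp(-log_odds))


def predict_drafted(points, division, threshold=0.5):
    """Classify a player as drafted when the probability reaches the threshold."""
    return draft_probability(points, division) >= threshold


if __name__ == "__main__":
    p = draft_probability(points=20, division=1)
    print(f"Probability: {p:.3f}")
    print(f"Predicted drafted: {predict_drafted(20, 1)}")
```

Calling `draft_probability(20, 1)` gives a value that rounds to .787, which matches the hand calculation.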

Step 4: Report the results.

Lastly, we want to report the results of our logistic regression. Here is an example of how to do so:

Logistic regression was performed to determine how points per game and division level affect a basketball player’s probability of getting drafted. A total of 14 players were used in the analysis.

The model explained 72.5% of the variation in draft result (Nagelkerke R Square) and correctly classified 85.7% of cases.

The odds of a player in division 2 getting drafted were just .339 times the odds of a player in division 1 getting drafted.

Each additional point per game multiplied the odds of a player getting drafted by 1.319, an increase of about 31.9%.