The F-value and p-value in an ANOVA are used to determine whether there is a statistically significant difference between the means of two or more groups. The F-value is the ratio of the mean square between groups to the mean square within groups. The p-value is the probability of obtaining an F-value at least as large as the one observed, assuming that all group means are actually equal. If the p-value is less than the significance level (usually 0.05), the difference is considered statistically significant.

An ANOVA (“analysis of variance”) is used to determine whether or not the means of three or more independent groups are equal.

An ANOVA uses the following null and alternative hypotheses:

- H0: All group means are equal.

- HA: At least one group mean is different from the rest.

Whenever you perform an ANOVA, you will end up with a summary table that looks like the following:

| Source | Sum of Squares (SS) | df | Mean Squares (MS) | F | P-value |
|---|---|---|---|---|---|
| Treatment | 192.2 | 2 | 96.1 | 2.358 | 0.1138 |
| Error | 1100.6 | 27 | 40.8 | | |
| Total | 1292.8 | 29 | | | |

Two values that we immediately analyze in the table are the F-statistic and the corresponding p-value.
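To make the origin of each column concrete, here is a minimal sketch that builds the rows of a one-way ANOVA table from raw observations. The three groups and their values are hypothetical and chosen only for easy arithmetic; they are not the data behind the table above.

```python
from statistics import fmean

# Hypothetical measurements for three independent groups (not from the article).
groups = {
    "A": [1.0, 2.0, 3.0],
    "B": [2.0, 3.0, 4.0],
    "C": [5.0, 6.0, 7.0],
}

all_values = [x for values in groups.values() for x in values]
grand_mean = fmean(all_values)

# Treatment (between-group) sum of squares: group size times the squared
# distance of each group mean from the grand mean.
ss_treatment = sum(
    len(values) * (fmean(values) - grand_mean) ** 2 for values in groups.values()
)

# Error (within-group) sum of squares: squared distance of each observation
# from its own group mean.
ss_error = sum(
    (x - fmean(values)) ** 2 for values in groups.values() for x in values
)

df_treatment = len(groups) - 1            # number of groups - 1
df_error = len(all_values) - len(groups)  # observations - number of groups

ms_treatment = ss_treatment / df_treatment
ms_error = ss_error / df_error
f_statistic = ms_treatment / ms_error

print(f"Treatment: SS={ss_treatment:.1f} df={df_treatment} MS={ms_treatment:.1f} F={f_statistic:.3f}")
print(f"Error:     SS={ss_error:.1f} df={df_error} MS={ms_error:.1f}")
print(f"Total:     SS={ss_treatment + ss_error:.1f} df={df_treatment + df_error}")
```

The Total row is simply the sum of the Treatment and Error rows, which is why the table reports no mean square or F-value for it.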

Understanding the F-Statistic in ANOVA

The F-statistic is the ratio of the mean squares treatment to the mean squares error:

- F-statistic: Mean Squares Treatment / Mean Squares Error

Another way to write this is:

- F-statistic: Variation between sample means / Variation within samples

The larger the F-statistic, the greater the variation between sample means relative to the variation within the samples.

Thus, the larger the F-statistic, the greater the evidence that there is a difference between the group means.
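As a quick check, the F-statistic in the summary table can be reproduced from its sums of squares and degrees of freedom. This is only a sketch of the arithmetic; the variable names are illustrative.

```python
# Values taken from the Treatment and Error rows of the summary table.
ss_treatment, df_treatment = 192.2, 2
ss_error, df_error = 1100.6, 27

# A mean square is a sum of squares divided by its degrees of freedom.
ms_treatment = ss_treatment / df_treatment
ms_error = ss_error / df_error

# The F-statistic is the ratio of the two mean squares.
f_statistic = ms_treatment / ms_error

print(round(ms_treatment, 1), round(ms_error, 1), round(f_statistic, 3))
```

The rounded results match the table: mean squares of 96.1 and 40.8, and an F-statistic of 2.358.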

Understanding the P-Value in ANOVA

To determine if the difference between group means is statistically significant, we can look at the p-value that corresponds to the F-statistic.

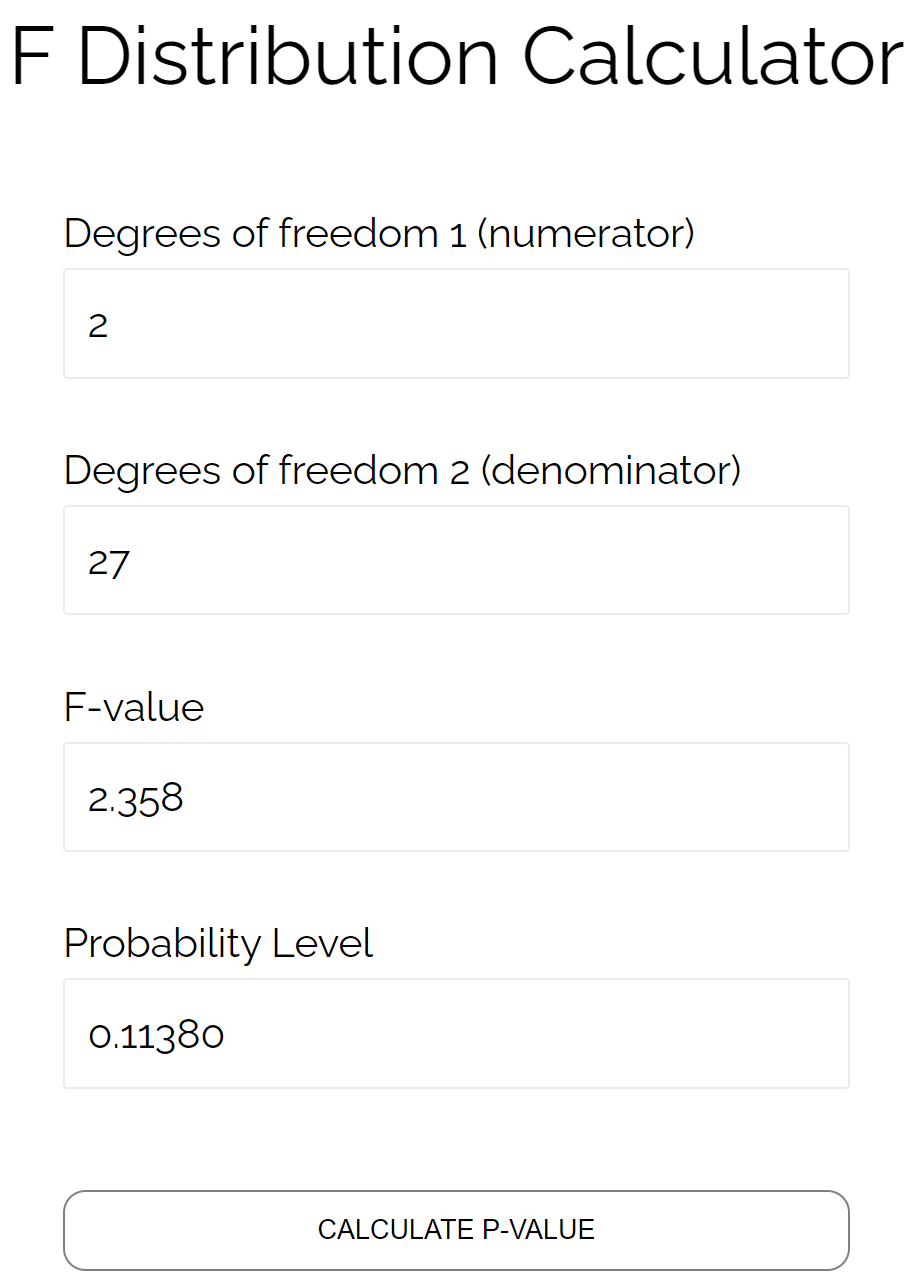

To find the p-value that corresponds to this F-value, we can use an F distribution calculator with numerator degrees of freedom = df Treatment and denominator degrees of freedom = df Error.

For example, the p-value that corresponds to an F-value of 2.358, numerator df = 2, and denominator df = 27 is 0.1138.

If this p-value is less than α = .05, we reject the null hypothesis of the ANOVA and conclude that there is a statistically significant difference between the means of the three groups.

Otherwise, if the p-value is not less than α = .05 then we fail to reject the null hypothesis and conclude that we do not have sufficient evidence to say that there is a statistically significant difference between the means of the three groups.

In this particular example, the p-value is 0.1138 so we would fail to reject the null hypothesis. This means we don’t have sufficient evidence to say that there is a statistically significant difference between the group means.
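The p-value lookup and the decision rule can also be sketched in code. The sketch below relies on a special case: when the numerator degrees of freedom equal 2, as in this table, the upper tail of the F distribution has the closed form (1 + 2F / df Error)^(-df Error / 2). The function name is hypothetical, and the formula does not apply to other numerator degrees of freedom.

```python
def f_upper_tail_numerator_df_2(f_value: float, df_error: int) -> float:
    """P(F >= f_value) for an F distribution with numerator df = 2 only."""
    return (1 + 2 * f_value / df_error) ** (-df_error / 2)

alpha = 0.05  # significance level
p_value = f_upper_tail_numerator_df_2(2.358, 27)

# Reject the null hypothesis only if the p-value is below alpha.
reject_null = p_value < alpha

print(round(p_value, 4), reject_null)
```

For a general number of groups, a statistics library is the more usual route; in SciPy, for instance, `scipy.stats.f.sf(f_value, df_treatment, df_error)` returns the same upper-tail probability.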

On Using Post-Hoc Tests with an ANOVA

If the p-value of an ANOVA is less than .05, then we reject the null hypothesis that all group means are equal.

In this scenario, we can then perform post-hoc tests to determine exactly which groups differ from each other.

There are several potential post-hoc tests we can use following an ANOVA, but the most popular ones include:

- Tukey Test

- Bonferroni Test

- Scheffe Test

Which post-hoc test you should use depends on your particular situation.
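As one illustration of how a post-hoc correction works, the Bonferroni approach compares each pairwise p-value against the significance level divided by the number of comparisons. A minimal sketch, assuming every pair among three groups is compared:

```python
from math import comb

n_groups = 3   # three groups, as in the example table
alpha = 0.05   # overall (family-wise) significance level

# Number of distinct pairs of groups that can be compared.
n_comparisons = comb(n_groups, 2)

# Bonferroni-adjusted threshold applied to each pairwise test.
alpha_per_test = alpha / n_comparisons

print(n_comparisons, round(alpha_per_test, 4))
```

With three groups there are three pairwise comparisons, so each pairwise p-value would need to fall below roughly 0.0167 to be declared significant under this correction.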
